TL;DR

In less than a year, LangChain has become one of the most widely used Python libraries for interacting with LLMs. However, it was mostly a library for proofs of concept (POCs), as it lacked the ability to build complex and scalable applications.

Everything changed in August 2023 when they released LangChain Expression Language (LCEL), a new syntax that bridges the gap from POC to production. This article will guide you through the ins and outs of LCEL, showing you how it simplifies the creation of custom chains and why you must learn it if you are building LLM applications!

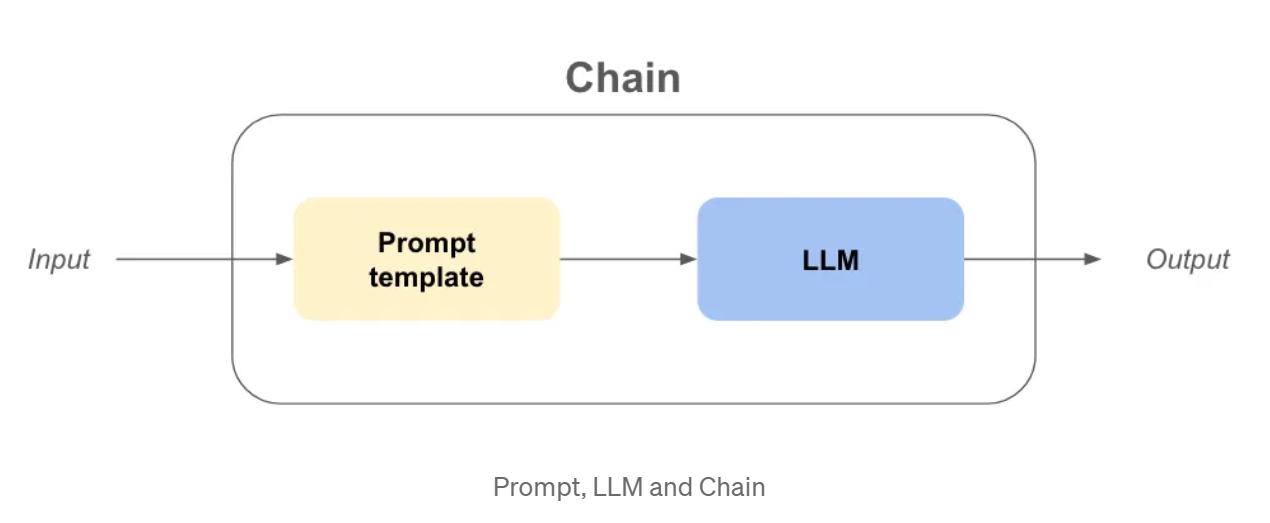

Prompts, LLMs and chains: let’s refresh our memory

Before diving into the LCEL syntax, it is worth refreshing our memory on core LangChain concepts: LLMs, prompts and chains.

LLM: in LangChain, an LLM is an abstraction around the model that produces the completions, such as OpenAI GPT-3.5, Claude, etc.

Prompt: the input of the LLM object. It asks the LLM questions and states its objectives.

Chain: This refers to a sequence of calls to an LLM, or any data processing step.
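To make these three definitions concrete without an API key, here is a minimal plain-Python sketch. It assumes a hypothetical `fake_llm` function that returns canned answers in place of a real model; it is not LangChain code, only an illustration of how a prompt template, an LLM call and a chain relate to each other.

```python
# Hypothetical stand-in for an LLM: NOT a LangChain API, it returns canned
# completions so the example runs offline.
def fake_llm(prompt: str) -> str:
    if "name for a company" in prompt:
        return "Socktastic!"
    return f"A business model for: {prompt.split(': ')[-1]}"


# Prompts: templates filled with the user's input.
PRODUCT_TEMPLATE = "What is a good name for a company that makes {product}?"
BUSINESS_TEMPLATE = "Give me the best business model idea for my company named: {company}"


def company_chain(product: str) -> str:
    """A chain: format a prompt, call the LLM, feed its output to the next step."""
    company = fake_llm(PRODUCT_TEMPLATE.format(product=product))
    return fake_llm(BUSINESS_TEMPLATE.format(company=company))


print(company_chain("colorful socks"))  # A business model for: Socktastic!
```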

Now that definitions are out of the way, let’s suppose we want to create a company! We need a really cool and catchy name and a business model to make some money!

Example — Company name & Business model with Old Chains

```python
from langchain.chains import LLMChain
from langchain.prompts import PromptTemplate
from langchain_community.llms import OpenAI

USER_INPUT = "colorful socks"

llm = OpenAI(temperature=0)

prompt_template_product = "What is a good name for a company that makes {product}?"
company_name_chain = LLMChain(llm=llm, prompt=PromptTemplate.from_template(prompt_template_product))
company_name_output = company_name_chain(USER_INPUT)

prompt_template_business = "Give me the best business model idea for my company named: {company}"
business_model_chain = LLMChain(llm=llm, prompt=PromptTemplate.from_template(prompt_template_business))
business_model_output = business_model_chain(company_name_output["text"])

print(company_name_output)
print(business_model_output)

# >>> {'product': 'colorful socks', 'text': 'Socktastic!'}
# >>> {'company': 'Socktastic!', 'text': "A subscription-based service offering a monthly delivery…"}
```

This is quite easy to follow: there is a bit of redundancy, but it is manageable.

Let’s add some customization by handling the cases where the user is not using our chain as expected.

Maybe the user will input something completely unrelated to the goal of our chain? In that case, we want to detect it and respond appropriately.

Example — Customization & Routing with Old Chains

```python
import ast

from langchain.chains import LLMChain
from langchain.prompts import PromptTemplate
from langchain_community.llms import OpenAI

USER_INPUT = "Harrison Chase"

llm = OpenAI(temperature=0)

# ---- Same code as before
prompt_template_product = "What is a good name for a company that makes {product}?"
company_name_chain = LLMChain(llm=llm, prompt=PromptTemplate.from_template(prompt_template_product))

prompt_template_business = "Give me the best business model idea for my company named: {company}"
business_model_chain = LLMChain(llm=llm, prompt=PromptTemplate.from_template(prompt_template_business))

# ---- New code
prompt_template_is_product = (
    "Your goal is to find if the input of the user is a plausible product name\n"
    "Questions, greetings, long sentences, celebrities or other non relevant inputs are not considered products\n"
    "input: {product}\n"
    "Answer only by 'True' or 'False' and nothing more\n"
)

prompt_template_cannot_respond = (
    "You cannot respond to the user input: {product}\n"
    "Ask the user to input the name of a product in order for you to make a company out of it.\n"
)

cannot_respond_chain = LLMChain(llm=llm, prompt=PromptTemplate.from_template(prompt_template_cannot_respond))
is_a_product_chain = LLMChain(llm=llm, prompt=PromptTemplate.from_template(prompt_template_is_product))

# bool() on any non-empty str is True (even "False"), so we need `literal_eval`.
# .strip() removes leading/trailing whitespace the completion model may add.
is_a_product = ast.literal_eval(is_a_product_chain(USER_INPUT)["text"].strip())

if is_a_product:
    company_name_output = company_name_chain(USER_INPUT)
    business_model_output = business_model_chain(company_name_output["text"])
    print(business_model_output)
else:
    print(cannot_respond_chain(USER_INPUT))
```
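The `literal_eval` comment in the code above deserves a closer look, since parsing the LLM’s “True”/“False” answer is easy to get wrong. The sketch below shows the pitfall and a stricter alternative; `parse_llm_bool` is a hypothetical helper written for this illustration, not part of LangChain.

```python
import ast


def parse_llm_bool(text: str) -> bool:
    """Hypothetical helper: parse an LLM answer that should be True/False.

    Surrounding whitespace (e.g. a leading newline) is stripped, and any
    answer other than true/false raises instead of silently becoming True.
    """
    cleaned = text.strip().lower()
    if cleaned == "true":
        return True
    if cleaned == "false":
        return False
    raise ValueError(f"Unexpected boolean answer: {text!r}")


# bool() only checks emptiness: any non-empty string is truthy.
print(bool("False"))               # True
print(ast.literal_eval("False"))   # False
print(parse_llm_bool("\nFalse "))  # False
```

Unlike `literal_eval`, this helper also tolerates case differences such as “false”, and it fails loudly on anything else.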

This is becoming harder to follow. Let’s summarize:

Several problems start to arise:

What is LangChain Expression Language (LCEL)?

LCEL is a unified interface and syntax for writing composable, production-ready chains. There is a lot to unpack in that sentence.

We will first try to understand the new syntax by rewriting the chain from earlier.

Example — Company name & Business model with LCEL

```python
from langchain_core.runnables import RunnablePassthrough
from langchain.prompts import PromptTemplate
from langchain_community.llms import OpenAI

USER_INPUT = "colorful socks"

llm = OpenAI(temperature=0)

prompt_template_product = "What is a good name for a company that makes {product}?"
prompt_template_business = "Give me the best business model idea for my company named: {company}"

chain = (
    PromptTemplate.from_template(prompt_template_product)
    | llm
    | {"company": RunnablePassthrough()}  # wrap the LLM's string output under the "company" key
    | PromptTemplate.from_template(prompt_template_business)
    | llm
)

business_model_output = chain.invoke({"product": USER_INPUT})
print(business_model_output)
```
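The least obvious step is `{'company': RunnablePassthrough()}`. The LLM returns a plain string, but the business prompt expects a dictionary with a `company` key. The dictionary step (converted to a Runnable by LangChain) feeds the same input to each of its values and collects the results under their keys, and `RunnablePassthrough` returns its input unchanged. The following plain-Python trace mimics that data flow; `fake_llm`, `passthrough` and `map_step` are hypothetical stand-ins, not LangChain functions.

```python
from typing import Any, Callable, Dict


def fake_llm(prompt: str) -> str:
    """Hypothetical canned LLM so the trace runs offline."""
    return "Socktastic!" if "name for a company" in prompt else "Monthly sock subscriptions"


def passthrough(value: Any) -> Any:
    """Mimics RunnablePassthrough: returns its input unchanged."""
    return value


def map_step(mapping: Dict[str, Callable[[Any], Any]], value: Any) -> Dict[str, Any]:
    """Mimics a dict step: call every value on the same input, collect by key."""
    return {key: fn(value) for key, fn in mapping.items()}


step1 = "What is a good name for a company that makes {product}?".format(product="colorful socks")
step2 = fake_llm(step1)                            # a plain string
step3 = map_step({"company": passthrough}, step2)  # {'company': 'Socktastic!'}
step4 = "Give me the best business model idea for my company named: {company}".format(**step3)
result = fake_llm(step4)

print(step3)
print(result)
```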

A lot of unusual code in just a few lines:

But is it really more composable to create chains this way?

Let’s put it to the test by adding the is_a_product_chain and branching when the user input is not a product. We can also annotate the chain with Python type hints; let’s do it as a good practice.

Example — Customisation & Routing with LCEL

```python
from typing import Dict

from langchain_core.runnables import RunnablePassthrough, RunnableBranch
from langchain.prompts import PromptTemplate
from langchain.output_parsers import BooleanOutputParser
from langchain_community.llms import OpenAI

USER_INPUT = "Harrison Chase"

llm = OpenAI(temperature=0)

prompt_template_product = "What is a good name for a company that makes {product}?"

prompt_template_cannot_respond = (
    "You cannot respond to the user input: {product}\n"
    "Ask the user to input the name of a product in order for you to make a company out of it.\n"
)

prompt_template_business = "Give me the best business model idea for my company named: {company}"

prompt_template_is_product = (
    "Your goal is to find if the input of the user is a plausible product name\n"
    "Questions, greetings, long sentences, celebrities or other non relevant inputs are not considered products\n"
    "input: {product}\n"
    "Answer only by 'True' or 'False' and nothing more\n"
)

answer_user_chain = (
    PromptTemplate.from_template(prompt_template_product)
    | llm
    | {"company": RunnablePassthrough()}
    | PromptTemplate.from_template(prompt_template_business)
    | llm
).with_types(input_type=Dict[str, str], output_type=str)

is_product_chain = (
    PromptTemplate.from_template(prompt_template_is_product)
    | llm
    | BooleanOutputParser(true_val="True", false_val="False")
).with_types(input_type=Dict[str, str], output_type=bool)

cannot_respond_chain = (
    PromptTemplate.from_template(prompt_template_cannot_respond) | llm
).with_types(input_type=Dict[str, str], output_type=str)

# Runs answer_user_chain if is_product_chain returns True, else cannot_respond_chain
full_chain = RunnableBranch(
    (is_product_chain, answer_user_chain),
    cannot_respond_chain,
).with_types(input_type=Dict[str, str], output_type=str)

print(full_chain.invoke({"product": USER_INPUT}))
```
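`RunnableBranch` takes `(condition, runnable)` pairs followed by a default runnable. As a rough mental model, here is a hypothetical `ToyBranch` class in plain Python that applies the same routing rule: conditions are tried in order, the first one returning True selects its step, and the default handles everything else. The hard-coded `KNOWN_PRODUCTS` set is an assumption standing in for `is_product_chain`; the real class does much more (typing, async, streaming).

```python
from typing import Any, Callable, List, Tuple

Condition = Callable[[Any], bool]
Step = Callable[[Any], Any]


class ToyBranch:
    """Hypothetical re-creation of the routing rule behind RunnableBranch:
    try the (condition, step) pairs in order, run the step of the first
    condition that returns True, otherwise run the default step."""

    def __init__(self, *branches_and_default: Any) -> None:
        *branches, default = branches_and_default
        self.branches: List[Tuple[Condition, Step]] = list(branches)
        self.default: Step = default

    def invoke(self, value: Any) -> Any:
        for condition, step in self.branches:
            if condition(value):
                return step(value)
        return self.default(value)


# Toy stand-in for is_product_chain: a hard-coded set instead of an LLM call.
KNOWN_PRODUCTS = {"colorful socks", "coffee mugs"}

branch = ToyBranch(
    (lambda d: d["product"] in KNOWN_PRODUCTS, lambda d: f"Company idea for {d['product']}"),
    lambda d: f"Please enter a product, not {d['product']!r}",
)

print(branch.invoke({"product": "colorful socks"}))  # Company idea for colorful socks
print(branch.invoke({"product": "Harrison Chase"}))  # Please enter a product, not 'Harrison Chase'
```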

Let’s list the differences:

Why is LCEL better for industrialization?

If I had stopped reading this article at this exact point and someone asked me whether I was convinced by LCEL, I would probably say no. The syntax is very different, and I could probably organize my code into functions to get almost exactly the same result. But I’m here, writing this article, so there must be something more.

Out of the box invoke, stream and batch

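By using LCEL, your chain automatically gets invoke, batch and stream methods:

```python
from langchain.prompts import PromptTemplate
from langchain_community.llms import OpenAI

# A prompt with a single variable accepts a plain string as input
prompt = PromptTemplate.from_template("{text}")
llm = OpenAI(temperature=0)
my_chain = prompt | llm

# ---------invoke--------- #
result_with_invoke = my_chain.invoke("hello world!")

# ---------batch--------- #
result_with_batch = my_chain.batch(["hello", "world", "!"])

# ---------stream--------- #
for chunk in my_chain.stream("hello world!"):
    print(chunk, flush=True, end="")
```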

While iterating during development, invoke is the simplest method to use. When displaying the output of your chain in a UI, however, you want to stream the response, and you can switch to the stream method without rewriting anything.
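Why can a single interface offer all three methods? One way to see it is that batch and stream can be expressed in terms of invoke. The hypothetical `ToyRunnable` below does exactly that in plain Python: `batch` maps `invoke` over the inputs on a thread pool, and `stream` yields the finished answer word by word. This is a simplification for illustration; a real LLM integration streams tokens as the model produces them.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterator, List


class ToyRunnable:
    """Hypothetical sketch: batch and stream derived from a single invoke."""

    def __init__(self, func: Callable[[str], str]):
        self.func = func

    def invoke(self, text: str) -> str:
        return self.func(text)

    def batch(self, inputs: List[str]) -> List[str]:
        # Independent inputs run concurrently; map() preserves input order.
        with ThreadPoolExecutor() as pool:
            return list(pool.map(self.invoke, inputs))

    def stream(self, text: str) -> Iterator[str]:
        # Toy streaming: yield the finished answer word by word, keeping the
        # separating spaces so the chunks join back into the full answer.
        words = self.invoke(text).split(" ")
        for i, word in enumerate(words):
            yield word if i == len(words) - 1 else word + " "


shout = ToyRunnable(lambda s: s.upper())
print(shout.invoke("hello world!"))         # HELLO WORLD!
print(shout.batch(["hello", "world", "!"]))  # ['HELLO', 'WORLD', '!']
for chunk in shout.stream("hello world!"):
    print(chunk, flush=True, end="")
print()
```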

Out of the box async methods

Most of the time, the frontend and backend of your application will be separated, meaning the frontend sends requests to the backend. If you have multiple users, your backend may need to handle multiple requests at the same time.

Since a LangChain application spends most of its time waiting on API calls, we can leverage asynchronous code to improve the scalability of our API. If you want to understand why this matters, I recommend reading the “Concurrent Burgers” story in the FastAPI documentation.

There is no need to worry about the implementation, because async methods are already available if you use LCEL:

.ainvoke() / .abatch() / .astream(): asynchronous versions of invoke, batch and stream.
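To see why async helps when most of the time is spent waiting on I/O, here is a plain asyncio sketch. `fake_api_call` is a hypothetical stand-in for an LLM request (it just sleeps); in an LCEL application you would `await chain.ainvoke(...)` instead. Three 0.2-second calls gathered concurrently should finish in roughly 0.2 seconds rather than 0.6.

```python
import asyncio
import time
from typing import List


async def fake_api_call(prompt: str, delay: float = 0.2) -> str:
    """Hypothetical stand-in for an LLM request: mostly waiting on I/O."""
    await asyncio.sleep(delay)
    return prompt.upper()


async def handle_requests(prompts: List[str]) -> List[str]:
    # While one call is waiting, the event loop makes progress on the others.
    return await asyncio.gather(*(fake_api_call(p) for p in prompts))


start = time.perf_counter()
results = asyncio.run(handle_requests(["hello", "world", "!"]))
elapsed = time.perf_counter() - start

print(results)            # ['HELLO', 'WORLD', '!']
print(f"{elapsed:.2f}s")  # roughly 0.2s, not 0.6s
```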

I also recommend reading the “Why use LCEL” page of the LangChain documentation, which has examples for each sync and async method.

LangChain provides these out-of-the-box features through a unified interface called Runnable. To leverage LCEL fully, we need to dive into this interface.

The Runnable interface

Every object we have used in the LCEL syntax so far is a Runnable, a Python object defined by LangChain that automatically inherits every feature discussed above, and a lot more. Each step of the LCEL syntax composes a new Runnable, so the final object is also a Runnable. You can learn more about the interface in the official documentation.

All the objects in the code below are either Runnables or dictionaries that are automatically converted to a Runnable:

```python
from langchain_core.runnables import RunnablePassthrough, RunnableParallel
from langchain.prompts import PromptTemplate
from langchain_community.llms import OpenAI

llm = OpenAI(temperature=0)
prompt_template_product = "What is a good name for a company that makes {product}?"
prompt_template_business = "Give me the best business model idea for my company named: {company}"

chain_number_one = (
    PromptTemplate.from_template(prompt_template_product)
    | llm
    | {"company": RunnablePassthrough()}  # <-- THIS WILL CHANGE
    | PromptTemplate.from_template(prompt_template_business)
    | llm
)

chain_number_two = (
    PromptTemplate.from_template(prompt_template_product)
    | llm
    | RunnableParallel(company=RunnablePassthrough())  # <-- THIS CHANGED
    | PromptTemplate.from_template(prompt_template_business)
    | llm
)

print(chain_number_one == chain_number_two)
# >>> True
```

Why do we use RunnableParallel() and not simply Runnable()?

Because every Runnable inside a RunnableParallel is executed in parallel. If your RunnableParallel contains three independent steps, they run at the same time on different threads, which speeds up your chain for free when those steps spend their time waiting on API calls.
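The idea behind RunnableParallel can be sketched with `concurrent.futures`: every step receives the same input, the steps are submitted to a thread pool, and the results are collected by key. `run_parallel` and `slow` below are hypothetical helpers, not LangChain APIs. Threads mainly pay off for I/O-bound steps such as API calls (simulated here with `time.sleep`); CPU-bound pure-Python steps are limited by the GIL.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict


def run_parallel(steps: Dict[str, Callable[[Any], Any]], value: Any) -> Dict[str, Any]:
    """Hypothetical sketch of the RunnableParallel idea: same input for every
    step, steps run on a thread pool, results collected by key."""
    with ThreadPoolExecutor(max_workers=max(1, len(steps))) as pool:
        futures = {key: pool.submit(step, value) for key, step in steps.items()}
        return {key: future.result() for key, future in futures.items()}


def slow(label: str) -> Callable[[str], str]:
    """Build a step that simulates a 0.2 s API call."""
    def step(text: str) -> str:
        time.sleep(0.2)
        return f"{label}: {text}"
    return step


start = time.perf_counter()
out = run_parallel(
    {"name": slow("name"), "slogan": slow("slogan"), "logo": slow("logo")},
    "socks",
)
elapsed = time.perf_counter() - start

print(out)
print(f"{elapsed:.2f}s")  # roughly 0.2 s instead of 0.6 s when run sequentially
```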

Drawbacks of LCEL

Despite its advantages, LCEL does have some potential drawbacks:

Conclusion

In conclusion, LangChain Expression Language (LCEL) is a powerful tool that brings a fresh perspective to building LLM applications in Python. Despite its unconventional syntax, I highly recommend using LCEL for the following reasons:

To Go Further…

The runnable abstraction

In some cases, I believe it’s important to understand the abstraction that LangChain has implemented to make the LCEL syntax work.

You can easily re-implement the basic functionality of Runnable as follows:

```python
class Runnable:
    def __init__(self, func):
        self.func = func

    def __or__(self, other):
        def chained_func(*args, **kwargs):
            # self.func is on the left, other is on the right
            return other(self.func(*args, **kwargs))

        return Runnable(chained_func)

    def __call__(self, *args, **kwargs):
        return self.func(*args, **kwargs)


def add_ten(x):
    return x + 10


def divide_by_two(x):
    return x / 2


runnable_add_ten = Runnable(add_ten)
runnable_divide_by_two = Runnable(divide_by_two)

chain = runnable_add_ten | runnable_divide_by_two

result = chain(8)  # (8+10) / 2 = 9.0 should be the answer
print(result)
# >>> 9.0
```

At its core, a Runnable is simply a Python object whose .__or__() method has been overridden, so that a | b returns a new Runnable that feeds the output of a into b.

In practice, LangChain adds a lot of functionality on top of this: converting dictionaries to Runnables, typing, configurability, and the invoke, batch, stream and async methods!
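As an illustration of the first of those features, the toy Runnable from above can be extended so that a dictionary on either side of `|` is coerced into a Runnable whose values all receive the same input. This is a hypothetical sketch that only echoes LangChain’s behavior; the `coerce` method and the single-argument signature are simplifications made for this sketch, not LangChain’s implementation.

```python
from typing import Any, Callable, Dict, Union


class Runnable:
    """Toy Runnable extended (hypothetically) with dict coercion."""

    def __init__(self, func: Callable[[Any], Any]):
        self.func = func

    @staticmethod
    def coerce(obj: Union["Runnable", Callable[[Any], Any], Dict[str, Any]]) -> "Runnable":
        if isinstance(obj, Runnable):
            return obj
        if isinstance(obj, dict):
            steps = {key: Runnable.coerce(step) for key, step in obj.items()}
            # Every step receives the same input; results are keyed by name.
            return Runnable(lambda x: {key: step(x) for key, step in steps.items()})
        return Runnable(obj)  # plain callables are wrapped as-is

    def __or__(self, other: Any) -> "Runnable":
        right = Runnable.coerce(other)
        return Runnable(lambda x: right(self(x)))

    def __ror__(self, other: Any) -> "Runnable":
        # Called for `dict | runnable`, because dict cannot handle a Runnable.
        return Runnable.coerce(other) | self

    def __call__(self, x: Any) -> Any:
        return self.func(x)


chain = Runnable(lambda x: x + 10) | {"half": lambda x: x / 2, "double": lambda x: x * 2}
print(chain(8))   # {'half': 9.0, 'double': 36}

chain2 = {"n": lambda x: x} | Runnable(lambda d: d["n"] * 3)
print(chain2(4))  # 12
```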

So, why not give LCEL a try in your next project?

If you want to learn more, I highly recommend browsing the LangChain cookbook on LCEL.
