Article written for the Medium blog by Artefact experts in collaboration with the French Tech Corporate Community.

The abundance and diversity of responses to ChatGPT and other generative AIs, whether skeptical or enthusiastic, demonstrate the changes they’re bringing about and the impact they’re having far beyond the usual technology circles. This is in stark contrast to previous generations of AI, which were essentially predictive and generally the subject of articles or theses confined to the realm of research and innovation.

For businesses, generative AI is also different from previous artificial intelligences. If we compare them to the most similar technologies, such as natural language processing (NLP) for text corpora or computer vision for audiovisual data, generative AIs bring four major changes that companies are becoming aware of as they experiment with them.

First, compared to previous AI, generative AI significantly accelerates use case deployment in the sense that it accelerates proof of concept. Second, they open up a new realm of possibilities, enabling easier, more efficient, and less costly enhancement of unstructured data. What’s more, the results obtained with generative AI are new in terms of quality, quantity and diversity compared to the models used previously. All of these factors mean that we need to address the heightened expectations of end users, fueled by the hype surrounding this technology. We develop these four points below.

Generative AI enables faster testing of the added value of use cases

In the field of generative AI, the deployment of use cases is often faster and less labor-intensive than with previous AIs. The approach adopted with generative AI is frequently compared to assembling Legos, where pre-existing components can be combined to create new results. This ease of experimentation and implementation can enable shorter development cycles. Additionally, a conversational interaction mode with users also accelerates adoption.

A data use case can be reduced to a business problem, data, a model, and a prompt. Traditionally, creating and optimizing the model represents the most complex and time-consuming part of the process. With generative AI, this step becomes simpler. Generative AI provides pre-trained, ready-to-use models, allowing companies to benefit from advanced expertise without investing significant time in developing and refining models. In practice, models (such as Azure’s GPT 4.0) are accessible “on-demand” or can be deployed via APIs (such as Google’s Gemini Pro BARD). Some providers even offer specially fine-tuned models for specific domains, such as generating legal, medical, or financial texts.

Once the model is deployed, the only task remaining is to “ground” the generative AI model, i.e., to anchor the results generated by the model to real-world information so as to constrain the model to respond within a given perimeter. This often involves adding constraints or additional information to guide the model towards producing results that are coherent and relevant in a specific context. However, this is a far cry from the time it takes to train the AI models we’ve been using until now.

Let’s take the example of a call center verbatim analysis use case to illustrate our point. According to an Artefact study, to develop this type of use case using models based on previous AI, it generally took three to four weeks from the time the data was retrieved and made usable. Today, thanks to generative AI, this process takes just one week, an acceleration factor of more than three. The main challenge is to choose the appropriate business classification to adapt the model.

Generative AI extends the scope of AI to previously little-used or misused data

Some oil fields are only profitable when oil prices skyrocket. The same principle can be applied to data. Certain unstructured data can now be mined thanks to generative AI, opening up a whole new field of exploitable data for training or fine-tuning models, and offering numerous prospects for applications that specialize in specific domains.

And there is an emerging promise: that of generative AIs capable of handling and combining any type of data in their training processes, bypassing the time-consuming and tedious work of structuring and improving the quality of company data to make it usable. A promise not yet fulfilled, based on current observations.

Generative AI has not only benefited from a real breakthrough in attention mechanisms. It has also benefited from the ever-increasing – and necessary – power of machines.

Attention mechanisms work a bit like a person’s ability to focus on an important part of an image or text when trying to understand or create something. Imagine trying to draw a landscape from a photograph. Rather than looking at the whole picture at once, you focus on certain parts that seem important, such as mountains or trees. This helps you better understand important details and create a more accurate drawing. Similarly, attention mechanisms allow the model to focus on specific parts of an image or text when generating content. Instead of processing all the input at once, the model can focus on the most relevant and important parts to produce more accurate and meaningful results. This enables it to learn how to create images, text, or other types of content more efficiently and realistically.

Attention mechanisms parallelize very well. The use of multiple attention mechanisms provides a richer and more robust representation of data, leading to improved performance in various tasks such as machine translation, text generation, speech synthesis, image generation, and many others.

As a result, use cases that seemed impossible not long ago have now become fully accessible. This is the case, for example, with calculating speaking time in the media during presidential campaigns. Just two years ago, accurately calculating the speaking time of each candidate was a tedious operation. Today, thanks to the use of generative AI, it’s possible.

Regarding computing capabilities, six years ago, OpenAI published an analysis showing that since 2012, the amount of computation used in the most significant AI training sessions has been increasing exponentially, with a doubling time of 3.4 months (for comparison, Moore’s Law had a doubling period of two years). Since 2012, this measure has increased by over 300,000 times (a doubling period of two years would only produce a sevenfold increase).

Generative AI models often require enormous amounts of computing power for training, especially since the models are designed to be generalist and need vast quantities of content for training. Powerful computing resources, such as high-end GPUs or TPUs, are needed to process large datasets and execute complex optimization algorithms. The new NVIDIA A100 Tensor Core GPU appears to provide unprecedented acceleration. According to Nvidia, the A100 offers performance up to 20 times higher than the previous generation and can be partitioned into seven GPU instances to dynamically adapt to changing demands. It also reportedly boasts the world’s fastest memory bandwidth, with over two terabytes per second (TB/s) for running the largest models and datasets.

It should be noted that improvements in computing have been a key element in the progress of artificial intelligence. As long as this trend continues, we should be prepared for the implications of systems that far exceed today’s capabilities and that will further push the boundaries, all while weighing the value these systems bring against the costs they incur, especially in terms of energy and the environment. We will discuss these points in a future article.

Generative AI improves the diversity, quality, and quantity of the results obtained

Generative AI clearly differs from previous AI in its impact on the results generated by its models. Not only has the quantity of results generated increased, but also their quality and diversity. However, all these positive aspects must be tempered by a lower reproducibility of generative AI models.

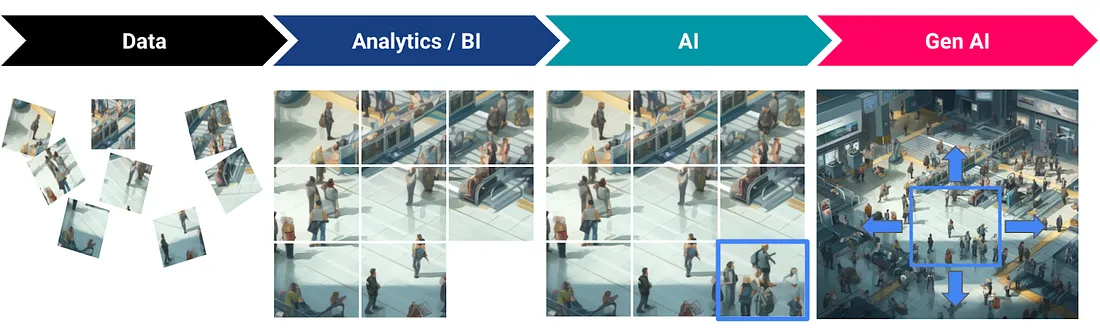

If we consider the image of a jigsaw puzzle, data analysis can be compared to its assembly, where each piece of data represents a piece to be arranged in order to reveal a coherent image. AI plays a critical role in trying to fill in the missing data by using the available information to infer and recreate those missing pieces. Generative AI goes beyond simply completing existing data by creating new data inspired by what already exists. This process expands analysis capabilities and allows new information to be discovered from existing data, bringing the generative aspect to the forefront.

Unlike previous generations of AI, which tend to produce results that are often similar, generative AI models are able to generate a greater diversity of results by exploring different variations and alternatives. This increased diversity makes it possible to generate richer, more diversified content, moving from the quantitative to the qualitative and covering a wider range of needs and preferences.

OpenAI was recently in Hollywood to showcase its latest model called “Sora,” capable of generating videos from text. “Hearing that it can do all these things is one thing, but actually seeing the capabilities was astonishing,” said Hollywood producer Mike Perry, highlighting the diversity and quality of capabilities offered by generative AI.

However, because of their ability to explore a wider space of possibilities, generative AI models can be less reproducible than previous AIs, and the accuracy of results is compromised. In concrete terms, it is more difficult to reproduce exactly the same results each time the model is run, which can pose challenges in terms of reliability and predictability in certain mission-critical applications.

This limitation constitutes a major challenge for applications of generative AI that require precise answers. And it’s an area that companies are working on in their current developments: to better specialize models in highly specific domains to improve answer accuracy, and to combine the robustness of rule-based models or queries on structured data with the ease of use and interaction with users of generative AIs by connecting these latter ones to the outputs of the former.

Heightened expectations from end users

When it comes to managing expectations and end users’ relationship to technology, generative AI presents several specific challenges. Due to its ability to produce results quickly, generative AI can raise particularly high expectations. Conversely, the occurrence of hallucinations and undesirable results can greatly undermine user trust in these solutions.

Generative AI is capable of producing results quickly and in an automated fashion, which can give end-users the impression that the technology is able to solve all their problems instantly and efficiently. This can lead to disproportionate expectations about the actual capabilities of generative AI, and disappointment if the results do not fully meet these high expectations.

Generative AI is, of course, not perfect and can sometimes produce unexpected or undesirable results, such as inconsistent, false, or inappropriate content. The occurrence of such undesirable results can lead to a loss of end user confidence in the technology, calling into question its reliability and usefulness. It can also raise concerns about data security and privacy when unexpected results compromise the integrity of information generated by generative AI.

In February 2023, Google’s chatbot Bard (renamed Gemini) provided incorrect information when asked about the discoveries of NASA’s James Webb Space Telescope. It erroneously claimed that the telescope had taken the first photos of an exoplanet. This statement is incorrect, as the first photos of an exoplanet date back to 2004, while the James Webb Telescope was only launched in 2021 (source: CNET France team, 2024).

It is thus crucial that end users of generative AI systems are aware of their limitations. Therefore, most companies deploying these solutions strive to support users in their usage: training in the art of prompting, explaining the limitations of these systems, clarifying what expectations can or cannot be met, and reminding them of the applicable rules in terms of data protection.

More than a year after the release of ChatGPT, expectations for this new technology are as high as ever. However, the value associated with it has yet to materialize in tangible use cases. In our next article, we will discuss topics related to enterprise adoption of the technology and how it is spread throughout the organization.

Under the leadership of:

BLOG

BLOG