Generative artificial intelligence (GenAI) is one of the most prevalent and rapidly evolving technologies today, with a market size that is expected to reach $42.17 billion this year, according to industry research [1]. With applications ranging from the use of chatbots and virtual assistants to predictive maintenance and fraud detection, GenAI has already had a major impact on a broad range of industries [2].

However, the rapid development of GenAI has also raised concerns about its potential risks, leading to the establishment of legal frameworks like the EU Artificial Intelligence (AI) Act [3]. This framework aims to ensure that AI solutions are developed and deployed in a safe, reliable, and ethical manner. To facilitate the implementation of appropriate safeguards, it introduces a risk-based approach that classifies AI systems into four levels according to their potential risk: unacceptable, high, limited, and minimal [3].

Concerns about GenAI solutions stem from their reliance on user-generated data and input, which often includes sensitive user information such as first and last names, IP addresses, email accounts, phone numbers, and copyrighted content. As with any solution that handles sensitive data, this raises questions about personal privacy and about how data is collected, processed, stored, and shared [4].

Given the risks associated with implementing GenAI and relying on user data, organizations should proactively apply Privacy by Design (PbD) principles throughout the development and deployment lifecycle of their AI solutions. This includes identifying and prioritizing the critical elements for protecting user privacy, such as obtaining user consent, ensuring data transparency and accountability, and anonymizing data. By building privacy considerations into the foundations of their GenAI systems, organizations can effectively mitigate risks and foster user trust.

Adopting Privacy by Design principles: Essential when implementing GenAI solutions

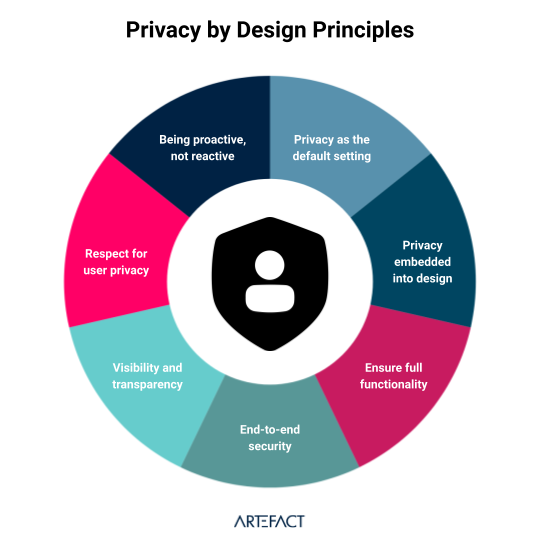

Privacy by Design (PbD) is a framework for embedding privacy into the design and development of information technologies and solutions. It was developed and published by Dr. Ann Cavoukian [5]. When used by organizations, this framework can help address user privacy concerns from the beginning of the design process, rather than trying to add privacy features retroactively.

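The framework is built around the following seven fundamental principles:

1. Proactive, not reactive; preventative, not remedial
2. Privacy as the default setting
3. Privacy embedded into design
4. Full functionality: positive-sum, not zero-sum
5. End-to-end security: full lifecycle protection
6. Visibility and transparency: keep it open
7. Respect for user privacy: keep it user-centric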

Considering that GenAI solutions often collect and process large amounts of user data, PbD is becoming increasingly important for organizations that value customer trust and aim to maintain a strong reputation for adopting data privacy best practices.

Critical elements for protecting user privacy

Building on the foundational principles of Privacy by Design, we have identified the critical elements for organizations that want to protect the privacy of their users’ data as they develop GenAI solutions, or any solution that involves sensitive data. These elements revolve around effective data governance, which is essential to upholding PbD principles throughout the development and deployment of GenAI solutions and includes establishing clear guidelines for collecting, processing, storing, sharing, and accessing data. To this end, implementing an AI data governance solution can help standardize and automate data management while ensuring consistent adherence to PbD principles.

Obtain user consent

Organizations should obtain explicit user consent before collecting, using, or sharing their personal data for GenAI purposes. This consent should be informed and voluntary, and users should be able to withdraw it at any time. In addition, organizations should regularly review and update their privacy policies and practices to ensure that they comply with the latest laws and regulations. Implementing a Consent Management Platform (CMP) can make this process more efficient and centralized.
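As a rough illustration of what a CMP tracks, the sketch below records consent per user and per purpose and lets it be withdrawn at any time. It is a minimal in-memory stand-in, not a real CMP: the `ConsentRecord` and `ConsentRegistry` names, the fields, and the `genai_training` purpose label are all assumptions made for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, Optional, Tuple


@dataclass
class ConsentRecord:
    """One user's consent for one purpose (hypothetical schema)."""
    user_id: str
    purpose: str
    granted_at: datetime
    withdrawn_at: Optional[datetime] = None

    @property
    def active(self) -> bool:
        # Consent counts only while it has not been withdrawn.
        return self.withdrawn_at is None


class ConsentRegistry:
    """In-memory stand-in for a Consent Management Platform (assumption)."""

    def __init__(self) -> None:
        self._records: Dict[Tuple[str, str], ConsentRecord] = {}

    def grant(self, user_id: str, purpose: str) -> None:
        # Record explicit consent with a UTC timestamp.
        self._records[(user_id, purpose)] = ConsentRecord(
            user_id, purpose, granted_at=datetime.now(timezone.utc)
        )

    def withdraw(self, user_id: str, purpose: str) -> None:
        # Keep the record for accountability, but mark it withdrawn.
        record = self._records.get((user_id, purpose))
        if record is not None and record.active:
            record.withdrawn_at = datetime.now(timezone.utc)

    def has_consent(self, user_id: str, purpose: str) -> bool:
        # No record means no consent: the default is "not allowed".
        record = self._records.get((user_id, purpose))
        return record is not None and record.active


registry = ConsentRegistry()
registry.grant("user-123", "genai_training")
print(registry.has_consent("user-123", "genai_training"))  # True
registry.withdraw("user-123", "genai_training")
print(registry.has_consent("user-123", "genai_training"))  # False
```

Any processing step would call `has_consent` before touching personal data, so a withdrawal takes effect on the next check.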

Be transparent and accountable with data usage

Transparency is about providing users with information about how their data is being used. Organizations should be transparent about how they collect, use, store, and share personal data for GenAI purposes. At the same time, they are accountable for ensuring that this data is always used in a responsible and ethical manner.

Optimize data collection and retention

While organizations often seek to collect as much user data as possible, demonstrating a commitment to responsible data management and privacy principles requires a more privacy-oriented and strategic approach. This includes assessing the need for personal data for each specific GenAI application and taking steps to optimize the length of time personal data is stored and processed.
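The two ideas above, collecting only what an application needs and limiting how long it is kept, can be sketched as follows. The field allowlist, the 30-day window, and the record layout are illustrative assumptions; real values would come from an organization's own assessment of each GenAI application.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical per-application allowlist and retention window.
ALLOWED_FIELDS = {"user_id", "prompt", "created_at"}
RETENTION = timedelta(days=30)


def minimize(record: dict) -> dict:
    """Keep only the fields this application actually needs."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


def purge_expired(records: list, now: datetime) -> list:
    """Drop records older than the retention window."""
    return [r for r in records if now - r["created_at"] <= RETENTION]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
raw = [
    {"user_id": "u1", "prompt": "hi", "email": "a@example.com",
     "created_at": now - timedelta(days=5)},
    {"user_id": "u2", "prompt": "hello", "phone": "555-0100",
     "created_at": now - timedelta(days=45)},
]

# Minimize at collection time, then purge on a schedule.
stored = purge_expired([minimize(r) for r in raw], now)
print(stored)
```

Here the email address and phone number are never stored, and the 45-day-old record is dropped because it exceeds the assumed retention window.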

Apply data anonymization and de-identification processes

Data anonymization and de-identification are techniques that help protect users’ identities. To that end, they should be used whenever possible prior to processing personally identifiable information (PII) for GenAI purposes.
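A minimal sketch of two common de-identification steps is shown below: redacting email and IPv4 addresses from free text, and replacing a user identifier with a keyed hash. The regular expressions are deliberately simple and will miss many real-world formats, and the function names and placeholder tokens are assumptions for this example. Keyed hashing is pseudonymization rather than full anonymization, since whoever holds the key can re-link the values.

```python
import hashlib
import hmac
import re

# Simplified patterns for illustration only; production PII detection
# needs far broader coverage (names, phone numbers, IPv6, and so on).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact(text: str) -> str:
    """Replace email and IPv4 addresses with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return IPV4_RE.sub("[IP]", text)


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Map a user ID to a stable pseudonym using HMAC-SHA256."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


prompt = "Contact jane.doe@example.com from 192.168.0.10 about my order."
print(redact(prompt))
# Contact [EMAIL] from [IP] about my order.

print(pseudonymize("user-123", b"demo-key-do-not-use"))
```

Running `redact` before a prompt reaches a GenAI model keeps the listed identifiers out of the model input, while the pseudonym still allows records from the same user to be grouped.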

Ensure user data security

It is important for organizations to implement security measures to protect personal data from unauthorized access, use, or disclosure. These security measures can range from the use of encryption protocols and user access management policies to conducting privacy impact assessments (PIAs) that can help identify and mitigate potential privacy risks associated with their GenAI solutions.
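As one small example of a user access management policy, the sketch below checks a role against a permission table and logs every attempt, allowed or denied. The roles, permission names, and log format are hypothetical; a real deployment would rely on the organization's identity and access management system rather than an in-code table.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "ml_engineer": {"read_anonymized"},
    "privacy_officer": {"read_anonymized", "read_pii"},
}


@dataclass
class AccessLog:
    """Append-only record of access decisions, kept for accountability."""
    entries: List[dict] = field(default_factory=list)

    def record(self, role: str, permission: str, allowed: bool) -> None:
        self.entries.append({
            "at": datetime.now(timezone.utc),
            "role": role,
            "permission": permission,
            "allowed": allowed,
        })


def check_access(role: str, permission: str, log: AccessLog) -> bool:
    """Deny by default: unknown roles get an empty permission set."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    log.record(role, permission, allowed)
    return allowed


log = AccessLog()
print(check_access("ml_engineer", "read_pii", log))      # False
print(check_access("privacy_officer", "read_pii", log))  # True
print(len(log.entries))                                   # 2
```

Logging denied attempts as well as granted ones gives reviewers the evidence they need when assessing privacy risks later.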

Integrating privacy into GenAI: An essential element for responsible innovation

Adopting Privacy by Design (PbD) principles in the development and implementation of GenAI solutions, as well as any other solution that makes use of sensitive data, reflects a proactive commitment to user privacy. This end-to-end “user-centric” approach involves integrating privacy considerations into every step of a solution’s lifecycle. By prioritizing user privacy from the outset, organizations can build strong, trust-based relationships with their users and mitigate potential reputational and legal risks over the long term.

Sources

[1] Generative AI – Worldwide, Statista

[2] The state of AI in 2023: Generative AI’s breakout year by McKinsey & Company

[3] EU Artificial Intelligence (AI) Act

[4] Generative AI is a legal minefield

[5] A. Cavoukian, “Privacy by Design”, Information and Privacy Commissioner of Ontario, Canada, 2009
