An algorithm for generating synthetic rare events of all types

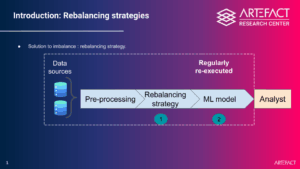

A common application of artificial intelligence is to assign a probability, or score, to people or events of interest. This scoring problem arises in many areas, such as disease detection, predictive maintenance in factories, the propensity of online visitors to make purchases, or the risk of losing subscribers. In these situations, the events of interest make up only a small fraction of the available data. This imbalance makes training machine learning models particularly difficult: they tend to focus on the majority of cases and ignore or underestimate rare ones, which causes operational problems once the AI is deployed. Rebalancing algorithms exist, but they are not suited to categorical data and generally fail to improve the accuracy of the final model.

To meet this challenge, Artefact’s research center has proposed a new rebalancing method for tabular data that takes into account both numerical and categorical variables. Tested on open source data, this approach shows significant performance improvements while maintaining the consistency, plausibility, and interpretability of the data, an aspect often overlooked by existing methods. Data rebalancing requires the creation of synthetic examples, which run the risk of being implausible, such as customer profiles that do not exist. This risk directly affects the adoption of artificial intelligence in cases where analysts must manually validate the most likely examples pre-selected by the model. Artefact's method addresses this problem by creating only plausible data during rebalancing, which makes it easier for businesses to adopt.
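To make the idea of plausibility-preserving rebalancing concrete, here is a minimal sketch in Python. It is not the algorithm from the paper or the `mgs-grf` repository: it is a simplified, SMOTE-style illustration under stated assumptions. The function name `oversample_mixed`, its parameters, and the toy customer rows are all hypothetical. Numerical features are interpolated between two real minority rows, while categorical features are copied together from a single real row, so no unseen combination of categories is ever created.

```python
import math
import random


def oversample_mixed(minority, num_keys, cat_keys, n_new, k=3, seed=0):
    """Create n_new synthetic rows from a list of minority-class dict rows.

    Hypothetical illustration, not the published method. Requires at least
    two minority rows. Numerical features are not rescaled here; a real
    pipeline would likely standardise them before computing distances.
    """
    rng = random.Random(seed)

    def dist(a, b):
        # Euclidean distance on the numerical features only.
        return math.sqrt(sum((a[key] - b[key]) ** 2 for key in num_keys))

    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        others = [row for row in minority if row is not base]
        # Pick one of the k nearest minority neighbours of the base row.
        neighbours = sorted(others, key=lambda row: dist(base, row))[:k]
        neighbour = rng.choice(neighbours)
        # Numerical features: random point on the segment base -> neighbour.
        t = rng.random()
        new_row = {
            key: base[key] + t * (neighbour[key] - base[key])
            for key in num_keys
        }
        # Categorical features: copied jointly from one real row, so the
        # combination of categories already exists in the data.
        donor = base if rng.random() < 0.5 else neighbour
        new_row.update({key: donor[key] for key in cat_keys})
        synthetic.append(new_row)
    return synthetic


# Toy minority class (invented values, for illustration only).
minority = [
    {"age": 34, "balance": 1200.0, "segment": "retail", "channel": "app"},
    {"age": 41, "balance": 5300.0, "segment": "retail", "channel": "branch"},
    {"age": 29, "balance": 800.0, "segment": "student", "channel": "app"},
    {"age": 52, "balance": 9100.0, "segment": "premium", "channel": "branch"},
]

synthetic = oversample_mixed(
    minority, ["age", "balance"], ["segment", "channel"], n_new=5
)
for row in synthetic:
    print(row)
```

Copying the categorical values as a block from one real row is the simplest way to guarantee that each generated profile carries a combination of categories that an analyst could actually encounter. The published method handles categorical variables in its own way, described in the paper.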

A turnkey research partnership with applications for Societe Generale use cases

This work is the result of a three-way partnership between the Artefact Research Center, the Sorbonne University Probability, Statistics and Modeling Laboratory (LPSM), and Societe Generale. The collaboration made it possible to define a three-year research topic that balances statistical and IT challenges with concrete issues faced by business teams for which no state-of-the-art solution exists. In this application, sales experts had reported that the banking profiles generated by existing approaches were inconsistent, which limited their adoption of an AI-based tool. This raised the challenge of keeping the generated examples plausible throughout rebalancing.

Through this partnership, researchers at Artefact and Sorbonne University were able to test their approaches on real bank data, which validated the statistical accuracy of the proposed algorithm. A distinctive part of the evaluation was scaling the method up to millions of data points processed in a reasonable amount of time, well beyond the size of equivalent open source datasets. The code is open source and the methodology is explained in detail in the scientific article, so that as many people as possible can apply the approach to other scoring use cases.

Etienne GUIBOUT, Group Chief AI Officer at Societe Generale, explains:

“This collaboration gives Societe Generale access to complementary expertise from the academic world. It promotes innovation by incorporating a variety of perspectives aimed at identifying solutions that are increasingly tailored to our problems. Acceptance at an A-level conference is a mark of quality for Societe Generale’s teams. It demonstrates recognition of the impact of the work carried out by peers and industry experts. Participating in such events allows us to share our research, while remaining part of the ecosystem. Societe Generale’s business teams, particularly compliance, were involved in the development of this article. Their sector expertise and feedback confirmed the relevance and applicability of the content presented. This interdisciplinary collaboration ensures that the article reflects market realities and serves our needs and those of our clients first and foremost.”

Emmanuel Malherbe, Director of the Artefact Research Center:

“This is an ideal partnership for our research center, perfectly illustrating our vision of applied, useful, and shared research. Machine learning is a field that always starts with data and a real problem. Through this collaboration, we have been able to focus on the poorly resolved issue of scoring on unbalanced tabular data, which is nevertheless a recurring problem in business and raises many statistical questions. Being able to test and validate the approach on real data was also key to achieving a fast, efficient, and accurate algorithm.”

Link to the scientific paper and code of the algorithm:

- Abdoulaye Sakho, Emmanuel Malherbe, Carl-Erik Gauthier, and Erwan Scornet. “Harnessing Mixed Features for Imbalance Data Oversampling: Application to Bank Customers Scoring.” In Joint European Conference on Machine Learning and Knowledge Discovery in Databases (2025). Code: https://github.com/artefactory/mgs-grf

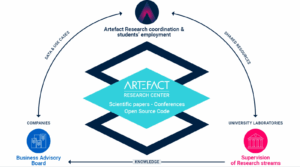

Artefact’s research center as a bridge between academia and industry

We are a team of 20 research scientists working in the fields of machine learning, computer science, and management science. We are dedicated to improving AI models, whether by making them more interpretable and controllable or by studying their use within companies. All of our work is open source, with presentations at peer-reviewed international conferences, scientific publications, white papers, and freely available code. We collaborate closely with renowned university professors. Our philosophy is to bridge the gap between industry and academia. Our research areas are inspired by real-world problems encountered in Artefact projects with our clients, and we are continually building industrial partnerships to test our methodologies on real use cases and datasets.

A crucial example concerns the explainability of statistical models. The adoption of machine learning models is hampered in many use cases because of the “black box” nature of certain models, or in other words, their lack of transparency and comprehensibility. More transparent models must therefore be proposed, while minimizing the associated degradation in predictive performance. Through the solutions it proposes, the research center is improving the adoption of AI by delivering the guarantees desired by industry.
