TL;DR

This article introduces LLMOps, a specialised branch merging DevOps and MLOps for managing the challenges posed by Large Language Models (LLMs). LLMs, like OpenAI’s GPT, use extensive text data for tasks such as text generation and language translation. LLMOps tackles issues like customisation, API changes, data drift, model evaluation, and monitoring through tools like LangSmith, TruLens, and W&B Prompts. It ensures adaptability, evaluation, and monitoring of LLMs in real-world scenarios, offering a comprehensive solution for organisations leveraging these advanced language models.

To guide you through this discussion, we will begin by revisiting the foundational principles of DevOps and MLOps. We will then turn to LLMOps, starting with a brief introduction to LLMs and how organisations use them. Finally, we’ll look at the main operational challenges posed by LLM technology and how LLMOps addresses them.

Foundational principles for LLMOps: DevOps and MLOps

DevOps, short for Development and Operations, is a set of practices that aim to automate the software delivery process, making it more efficient, reliable, and scalable. Its core principles include collaboration, automation, continuous testing, monitoring, and deployment orchestration.

MLOps, short for Machine Learning Operations, is an extension of DevOps practices specifically tailored for the lifecycle management of machine learning models. It addresses the unique challenges posed by the iterative and experimental nature of machine learning development, and introduces additional tasks such as data versioning and management, experimentation, and model training.

LLMOps: Managing the Deployment and Maintenance of Large Language Models

LLMOps, short for Large Language Model Operations, is a specialised branch of MLOps specifically designed to handle the unique challenges and requirements of managing large language models (LLMs).

But first, what exactly are LLMs?

LLMs are deep learning models whose billions of parameters are estimated from massive amounts of text data. These parameters enable LLMs to understand and generate human-quality text, translate languages, summarise complex information, and perform various other natural language processing tasks.

How organisations use LLMs

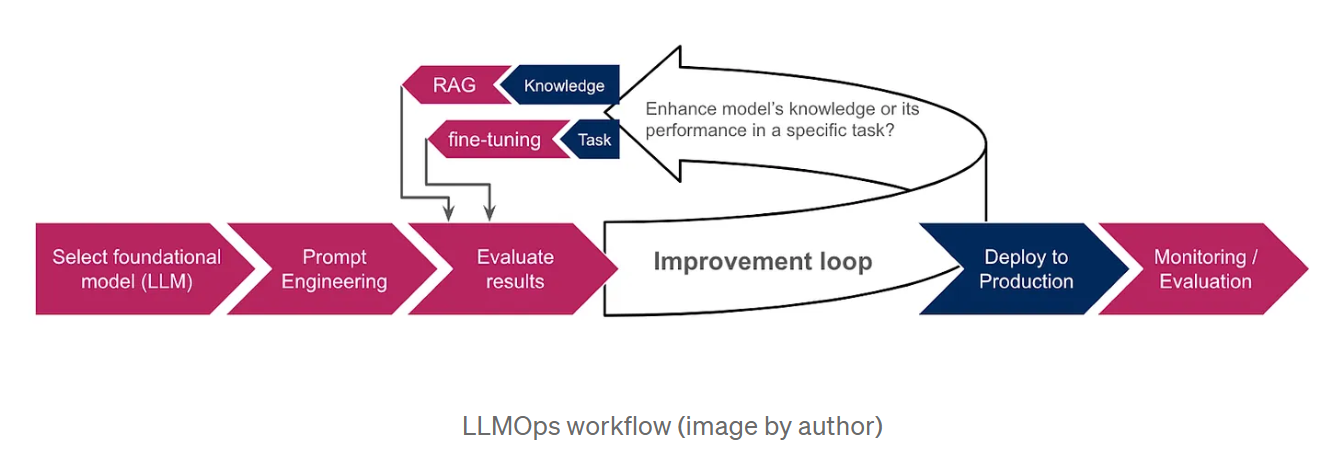

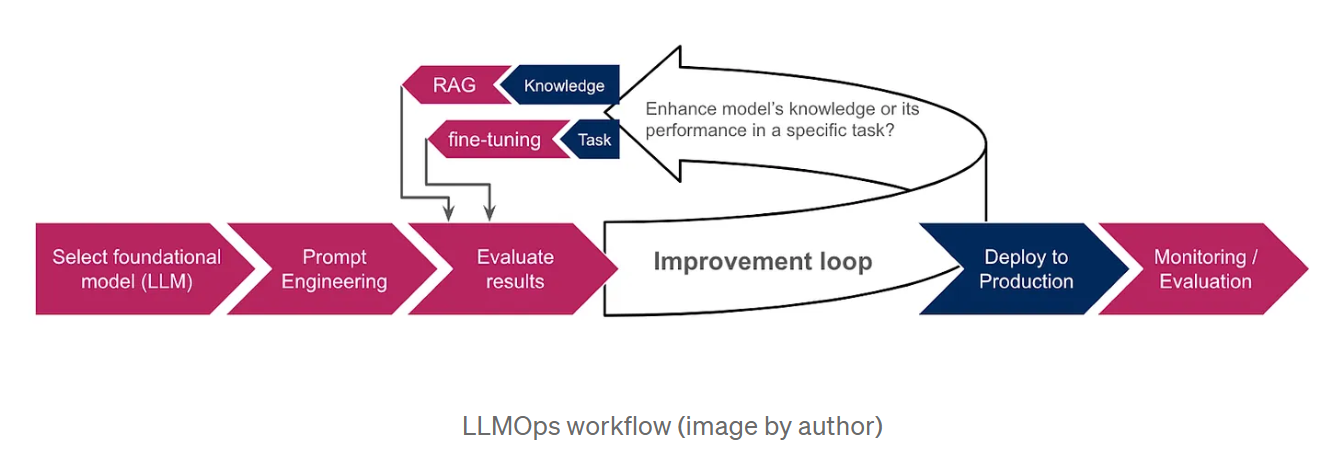

Because training LLMs from scratch is extremely expensive and time-consuming, organisations opt for pre-trained foundation models, such as OpenAI’s GPT or Google AI’s LaMDA, as a starting point. Having already been trained on large amounts of data, these models possess extensive knowledge and can perform various tasks, including generating text, translating languages, and writing different kinds of creative content. To further customise the LLM’s output to specific tasks or domains, organisations employ techniques such as prompt engineering, retrieval-augmented generation (RAG) and fine-tuning. Prompt engineering involves crafting clear and concise instructions that guide the LLM toward the desired outcome, while RAG grounds the model on additional information from external data sources, enhancing its performance and relevance. Fine-tuning, on the other hand, involves adjusting the LLM’s parameters using additional data specific to the organisation’s needs. The schema accompanying this section provides an overview of the LLMOps workflow, illustrating how these techniques integrate with the overall process.
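To make prompt engineering and RAG more concrete, here is a minimal sketch in plain Python. It is an illustration under simplifying assumptions rather than a production setup: the `DOCUMENTS` list, the bag-of-words retriever and the prompt template are all hypothetical, and a real system would typically use an embedding model and a vector store for retrieval.

```python
import math
from collections import Counter

# Hypothetical in-memory knowledge base (a real system would query a vector store).
DOCUMENTS = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Standard delivery takes 3 to 5 working days within the EU.",
    "Support is available by email from Monday to Friday.",
]

# Prompt engineering: explicit instructions plus a slot for retrieved context.
PROMPT_TEMPLATE = (
    "Answer the question using only the context below. "
    "If the context is insufficient, say so.\n\n"
    "Context:\n{context}\n\nQuestion: {question}\nAnswer:"
)


def tokenize(text: str) -> list[str]:
    """Lowercase, split on whitespace and strip basic punctuation."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return [w for w in words if w]


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, documents: list[str], k: int = 1) -> str:
    """RAG: ground the prompt on retrieved context before calling the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return PROMPT_TEMPLATE.format(context=context, question=question)


if __name__ == "__main__":
    print(build_prompt("How long does delivery take?", DOCUMENTS))
```

The resulting string is what would be sent to the LLM. Fine-tuning is not shown here because it changes the model’s parameters rather than its input.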

Why we need LLMOps

The rapid advancements in LLM technology have brought to light several operational challenges that require specialised approaches.

Some of these challenges include:

The need for customisation: While LLMs are pre-trained on massive amounts of data, customisation is essential for optimal performance on specific tasks. This has led to the development of new customisation techniques, such as prompt engineering, retrieval-augmented generation (RAG) and fine-tuning. RAG helps ground the model on the most accurate information by providing it with an external knowledge base, while fine-tuning is more suitable when we want the model to perform specific tasks, or adhere to a particular response format such as JSON or SQL. The choice between RAG and fine-tuning depends on whether we aim to enhance the model’s knowledge or improve its performance in a specific task.

API changes: Unlike traditional ML models, LLMs are often accessed through third-party APIs, which can be modified or even deprecated, necessitating continuous monitoring and adaptation. For instance, OpenAI’s documentation explicitly mentions that their models are subject to regular updates, which may require users to update their software or migrate to newer models or endpoints.

Data drift: This refers to a shift in the statistical properties of input data. It frequently occurs in production, when the data encountered deviates from the data the LLMs were trained on, and can lead to the generation of inaccurate or outdated information. For instance, the GPT-3.5 model’s knowledge was initially limited to information up to September 2021, before the cutoff date was extended to January 2022. Consequently, it couldn’t answer questions about more recent events, leading to user frustration.

Model evaluation: In traditional machine learning, we rely on metrics like accuracy, precision, and recall to assess our models. However, evaluating LLMs is significantly more intricate, especially in the absence of ground truth data and when dealing with natural language outputs rather than numerical values.

Monitoring: Continuous monitoring of LLMs and LLM-based applications is crucial. It’s also more complicated, because several aspects need to be considered to ensure the overall effectiveness and reliability of these language models. We’ll discuss these aspects in more detail in the next section.

How LLMOps addresses these challenges

LLMOps builds upon the foundation of MLOps while introducing specialised components tailored for LLMs:

Prompt engineering and fine-tuning management: LLMOps provides tools such as prompt version control systems to track and manage different versions of prompts. It also integrates with fine-tuning frameworks to automate and optimise the fine-tuning process. A prominent example of these tools is LangSmith, a framework specifically designed for managing LLM workflows. Its features include prompt versioning, which enables controlled experimentation and reproducibility. Additionally, LangSmith facilitates fine-tuning of LLMs using data from logged runs, optionally filtered and enriched, to enhance model performance.
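The idea behind prompt versioning can be sketched in a few lines of plain Python. This is not LangSmith’s API: the `PromptRegistry` class and its methods are hypothetical names chosen for illustration, and the version identifier is simply a short content hash of the template.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store mapping a prompt name to its version history."""

    versions: dict = field(default_factory=dict)  # name -> list of (version_id, template)

    def commit(self, name: str, template: str) -> str:
        """Store a template and return its version id (a short content hash)."""
        version_id = hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]
        entries = self.versions.setdefault(name, [])
        # Only record a new version when the content actually changed.
        if not entries or entries[-1][0] != version_id:
            entries.append((version_id, template))
        return version_id

    def get(self, name: str, version_id: Optional[str] = None) -> str:
        """Return the latest template, or a pinned version if one is given."""
        entries = self.versions[name]
        if version_id is None:
            return entries[-1][1]
        for vid, template in entries:
            if vid == version_id:
                return template
        raise KeyError(f"{name}@{version_id} not found")

    def history(self, name: str) -> list:
        """List version ids from oldest to newest."""
        return [vid for vid, _ in self.versions[name]]


if __name__ == "__main__":
    registry = PromptRegistry()
    v1 = registry.commit("summarise", "Summarise the text: {text}")
    v2 = registry.commit("summarise", "Summarise the text in 3 bullet points: {text}")
    print(registry.history("summarise"))
    print(registry.get("summarise", v1))  # pinned version for reproducibility
```

Pinning a version id in an experiment makes the run reproducible even after the prompt has been edited, which is the property that controlled experimentation relies on.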

API change management: LLMOps establishes processes for monitoring API changes, alerting operators to potential disruptions, and enabling rollbacks if necessary.
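As a rough sketch of what such a process might look like, the snippet below checks the models in use against a deprecation schedule and falls back to another model when a call fails. The model names, the schedule and the client callables are all hypothetical; in practice the schedule would be maintained from the provider’s published deprecation notices.

```python
from datetime import date
from typing import Callable, Optional


def deprecation_alerts(
    models_in_use: list,
    schedule: dict,
    today: date,
    warn_days: int = 30,
) -> list:
    """Return alert messages for models that are retired or close to retirement.

    `schedule` maps a model name to its shutdown date, or None if none is announced.
    """
    alerts = []
    for model in models_in_use:
        shutdown: Optional[date] = schedule.get(model)
        if shutdown is None:
            continue
        days_left = (shutdown - today).days
        if days_left < 0:
            alerts.append(f"{model}: retired on {shutdown.isoformat()}")
        elif days_left <= warn_days:
            alerts.append(f"{model}: retires in {days_left} days")
    return alerts


def call_with_fallback(prompt: str, clients: list) -> tuple:
    """Try each (name, client) pair in order and return the first success.

    Keeping the previous model at the end of the list acts as a simple rollback path.
    """
    errors = []
    for name, client in clients:
        try:
            return name, client(prompt)
        except Exception as exc:  # broad on purpose: any provider error triggers fallback
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


if __name__ == "__main__":
    schedule = {"model-v1": date(2024, 1, 4), "model-v2": None}  # hypothetical dates
    print(deprecation_alerts(["model-v1", "model-v2"], schedule, today=date(2023, 12, 20)))
```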

Model adaptation to changing data: LLMOps facilitates the adaptation of LLMs to evolving data landscapes, ensuring that models remain relevant and performant as data patterns shift. This could be achieved by monitoring data distributions and triggering adaptation processes when significant changes are detected. These processes may include:

-> Retraining or fine-tuning: Depending on the extent of data drift and the available resources, either retraining or fine-tuning can be employed to mitigate its impact.

-> Domain adaptation: Fine-tuning the LLM on a dataset from the target domain.

-> Knowledge distillation: Training a smaller model by leveraging the knowledge and expertise of a larger, more powerful, up-to-date model.
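The step that comes before any of these processes, monitoring data distributions and triggering adaptation when they shift, can be sketched as follows. This is one possible approach among many: it compares the word distribution of recent prompts with a reference sample using the Jensen-Shannon divergence. The function names and the threshold of 0.3 are assumptions made for illustration; a real threshold would need to be calibrated on your own traffic.

```python
import math
from collections import Counter


def token_distribution(texts: list) -> dict:
    """Relative frequency of each lowercase whitespace-separated token."""
    counts = Counter(w.lower() for t in texts for w in t.split())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def js_divergence(p: dict, q: dict) -> float:
    """Jensen-Shannon divergence in bits: 0 for identical, 1 for disjoint distributions."""
    vocab = set(p) | set(q)
    mixture = {w: 0.5 * (p.get(w, 0.0) + q.get(w, 0.0)) for w in vocab}

    def kl(a: dict) -> float:
        # Kullback-Leibler divergence of `a` from the mixture.
        return sum(a[w] * math.log2(a[w] / mixture[w]) for w in a if a[w] > 0)

    return 0.5 * kl(p) + 0.5 * kl(q)


def drift_detected(reference: list, recent: list, threshold: float = 0.3) -> bool:
    """Flag drift when the divergence exceeds an (assumed) threshold."""
    score = js_divergence(token_distribution(reference), token_distribution(recent))
    return score > threshold


if __name__ == "__main__":
    reference = ["how do I reset my password", "where is my invoice"]
    recent = ["explain the new pricing tiers", "what changed in the pricing tiers"]
    if drift_detected(reference, recent):
        print("Drift detected: consider fine-tuning or refreshing the knowledge base")
```

Word frequencies are a crude signal. Comparing embedding distributions would capture semantic shifts better, at the cost of an extra model call per prompt.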

LLM-specific evaluation: LLMOps employs new evaluation tools adapted to LLMs. These include:

-> Text-based metrics, such as perplexity, a statistical measure of how well the model predicts the next word in a sequence, and BLEU and ROUGE, which compare machine-generated text to one or more human-written reference texts. BLEU and ROUGE are commonly used for translation and summarisation tasks.

-> Analysing embeddings (vector representations of words or phrases) to assess the model’s ability to understand context-specific words and capture semantic similarities. Visualisation and clustering techniques can also help with bias detection.

-> Evaluator LLMs: Using other LLMs to evaluate our model. For instance, this can be done by attributing a score to the output of the evaluated model based on predefined metrics, such as fluency, coherence, relevance, and factual accuracy.

-> Human feedback integration: LLMOps incorporates mechanisms for gathering and incorporating human feedback into the ML lifecycle, improving LLM performance and addressing biases.
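Two of the text-based metrics listed above are simple enough to compute by hand. The sketch below shows perplexity, computed as the exponential of the negative mean token log-probability, and a simplified ROUGE-1 based on unigram overlap. It assumes whitespace tokenisation and natural-log probabilities supplied by the model; established evaluation libraries handle tokenisation, stemming and multiple references more carefully.

```python
import math
from collections import Counter


def perplexity(token_logprobs: list) -> float:
    """Perplexity from per-token natural-log probabilities: exp(-mean(log p))."""
    if not token_logprobs:
        raise ValueError("at least one token log-probability is required")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def rouge1(candidate: str, reference: str) -> dict:
    """Simplified ROUGE-1: unigram overlap between candidate and reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # clipped count of shared words
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # A model that assigns probability 0.25 to every token has perplexity 4.
    print(perplexity([math.log(0.25)] * 4))
    print(rouge1("the cat sat", "the cat sat on the mat"))
```

A lower perplexity means the model was less "surprised" by the text, while higher ROUGE scores mean more overlap with the reference.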

TruLens is a tool that enables integration of these evaluations into LLM applications through a programmatic approach known as Feedback Functions.
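To illustrate the general idea of scoring outputs programmatically with an evaluator LLM, here is a sketch in plain Python. It does not use the TruLens API: `make_feedback`, the judge prompt and the 1 to 5 scale are assumptions chosen for the example, and `judge` stands for any callable that sends a prompt to an evaluator LLM and returns its text reply.

```python
import re
from typing import Callable

# Hypothetical rubric prompt sent to the evaluator LLM.
JUDGE_TEMPLATE = (
    "Rate the RESPONSE to the QUESTION for {criterion} on a scale from 1 to 5. "
    "Reply with a single integer only.\n\n"
    "QUESTION: {question}\nRESPONSE: {response}\nSCORE:"
)


def make_feedback(judge: Callable[[str], str], criterion: str) -> Callable[[str, str], float]:
    """Build a function scoring (question, response) pairs between 0.0 and 1.0."""

    def feedback(question: str, response: str) -> float:
        reply = judge(
            JUDGE_TEMPLATE.format(criterion=criterion, question=question, response=response)
        )
        match = re.search(r"\b[1-5]\b", reply)
        if match is None:
            raise ValueError(f"could not parse a score from: {reply!r}")
        # Map the 1..5 rating onto 0.0..1.0.
        return (int(match.group()) - 1) / 4

    return feedback


if __name__ == "__main__":
    # Stand-in for a real evaluator LLM so the example runs offline.
    def fake_judge(prompt: str) -> str:
        return "4"

    relevance = make_feedback(fake_judge, "relevance")
    print(relevance("What is LLMOps?", "A set of practices for operating LLM applications."))
```

Returning a normalised score makes it easy to aggregate different criteria, such as fluency, coherence and relevance, and to track them over time.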

LLM-specific monitoring: LLMOps integrates continuous monitoring to track LLM performance metrics, identify potential issues, and detect concept drift or bias. This includes:

-> Functional monitoring: tracking the number of requests, response time, token usage, error rates and cost.

-> Prompt monitoring: ensuring readability and detecting toxicity and other forms of abuse. W&B Prompts is a set of tools designed for monitoring LLM-based applications. It can be used to analyse the inputs and outputs of your LLMs, view the intermediate results and securely store and manage your prompts.

-> Response monitoring: guaranteeing the model’s relevance and consistency. This includes preventing the generation of hallucinated or fictional content, as well as ensuring the exclusion of harmful or inappropriate material. Transparency can help us better understand the model’s responses. It can be established by revealing answer sources (in RAG) or by prompting the model to justify its reasoning (chain of thought).

This monitoring data can be utilised to enhance operational efficiency. We can improve cost management by implementing alerts on token usage and employing strategies such as caching previous responses. This allows us to reuse them for similar queries without invoking the LLM again. Additionally, we can minimise latency by opting for smaller models whenever feasible and constraining the number of generated tokens.
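The sketch below combines a few of these ideas: it wraps an LLM client, records latency and token usage, raises an alert when a token budget is exceeded, and serves repeated queries from a cache. Everything here is a simplified assumption. `MonitoredLLM` is a hypothetical class, tokens are approximated by whitespace-separated words, and the cache only matches queries that are identical after normalising case and spacing, whereas a semantic cache would compare embeddings.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class MonitoredLLM:
    """Hypothetical wrapper adding caching and basic functional monitoring."""

    llm: Callable[[str], str]   # any client: prompt -> completion
    token_budget: int           # alert once total usage exceeds this
    cache: dict = field(default_factory=dict)
    latencies: list = field(default_factory=list)
    alerts: list = field(default_factory=list)
    total_tokens: int = 0
    llm_calls: int = 0
    cache_hits: int = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalise case and whitespace so trivially different prompts share an entry.
        return " ".join(prompt.lower().split())

    @staticmethod
    def _count_tokens(text: str) -> int:
        # Crude proxy: real tokenisers usually produce more tokens than words.
        return len(text.split())

    def ask(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self.cache:
            self.cache_hits += 1
            return self.cache[key]  # no LLM call, no extra cost

        start = time.perf_counter()
        answer = self.llm(prompt)
        self.latencies.append(time.perf_counter() - start)

        self.llm_calls += 1
        self.total_tokens += self._count_tokens(prompt) + self._count_tokens(answer)
        if self.total_tokens > self.token_budget:
            self.alerts.append(
                f"token budget exceeded: {self.total_tokens} > {self.token_budget}"
            )

        self.cache[key] = answer
        return answer


if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:  # stand-in for a real client
        return "LLMOps manages LLM applications."

    bot = MonitoredLLM(llm=fake_llm, token_budget=5)
    bot.ask("What is LLMOps?")
    bot.ask("  what is   llmops? ")  # served from the cache
    print(bot.llm_calls, bot.cache_hits, bot.total_tokens, bot.alerts)
```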

Conclusion

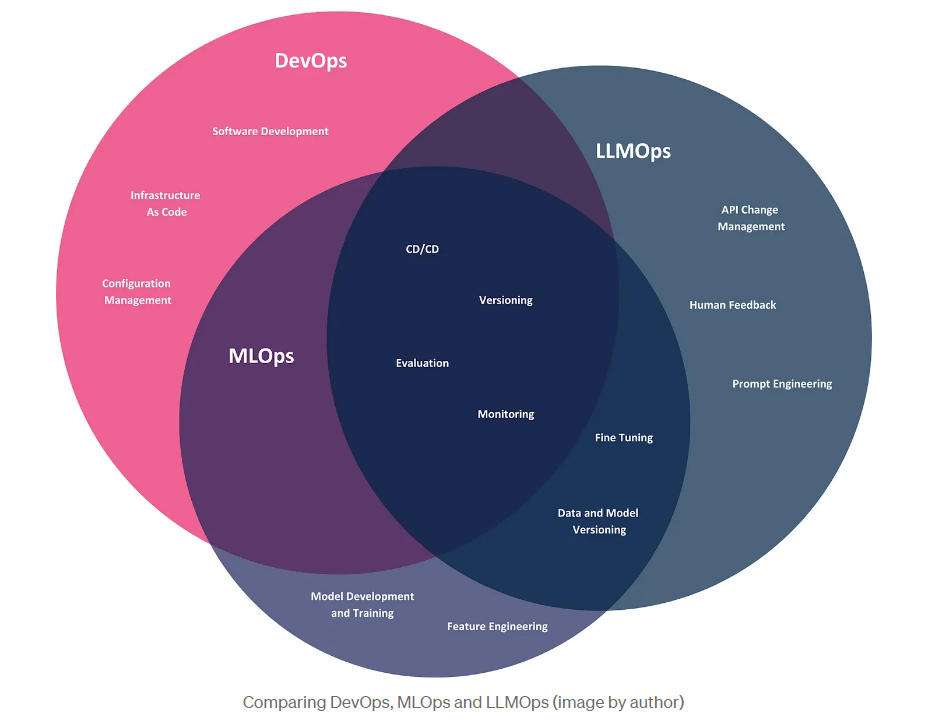

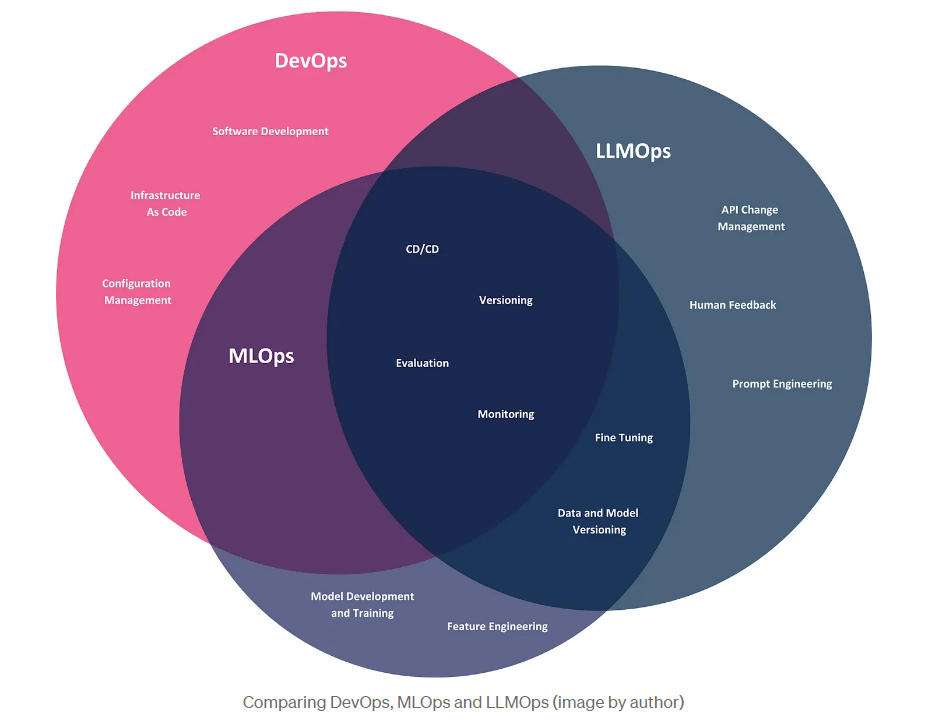

In this article, we explored the emergence of LLMOps, a descendant of DevOps and MLOps, specifically designed to address the operational challenges posed by large language models. Let’s conclude with a visual comparison of these three methodologies, illustrating their scope within the context of LLM user companies, who leverage these models to create products and solve business problems.

While the three methodologies share common practices like CI/CD, versioning, and evaluation, they each have distinct areas of focus. DevOps spans the entire software development lifecycle, from development to deployment and maintenance. MLOps extends DevOps to address the specific challenges of machine learning models, including automating model training, deployment, and monitoring. LLMOps, the latest iteration of these methodologies, focuses specifically on LLMs. Even though LLM user companies don’t need to develop their own models, they still face operational challenges, including managing API changes and customising models through techniques such as prompt engineering and fine-tuning.
