Auriau, Vincent, Ali Aouad, Antoine Désir, and Emmanuel Malherbe. "Choice-Learn: Large-scale choice modeling for operational contexts through the lens of machine learning". Journal of Open Source Software 9, no. 101 (2024): 6899.

Introduction

Discrete choice models aim at predicting the choice decisions made by individuals from a menu of alternatives, called an assortment. Well-known use cases include predicting a commuter's choice of transportation mode or a customer's purchases. Choice models are able to handle assortment variations, when some alternatives become unavailable or when their features change in different contexts. This adaptability to different scenarios allows these models to be used as inputs for optimization problems, including assortment planning or pricing.

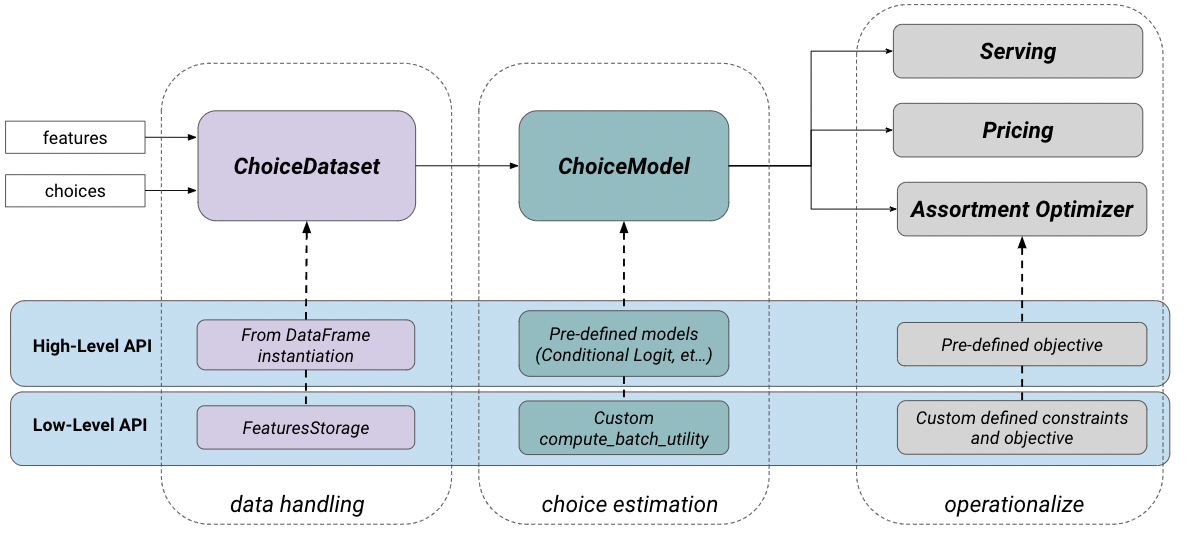

Choice-Learn provides a modular suite of choice modeling tools for practitioners and academic researchers to process choice data, and then formulate, estimate, and operationalize choice models. The library is structured into two levels of usage, as illustrated in Figure 1. The higher level is designed for fast and easy implementation, while the lower level enables more advanced parameterizations. This structure, inspired by the different endpoints of Keras (Chollet et al., 2015), enables a user-friendly interface. Choice-Learn was designed with the following objectives:

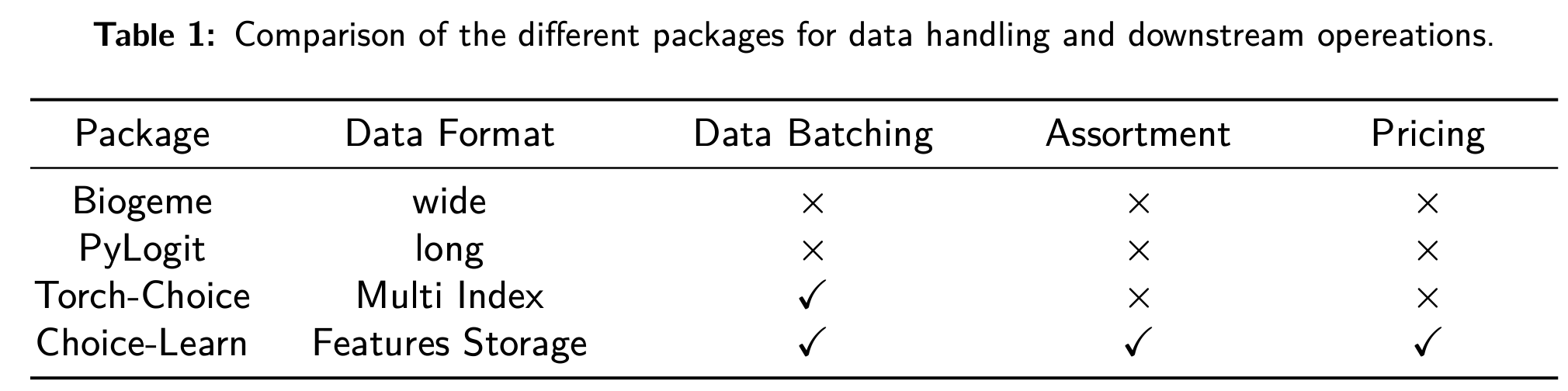

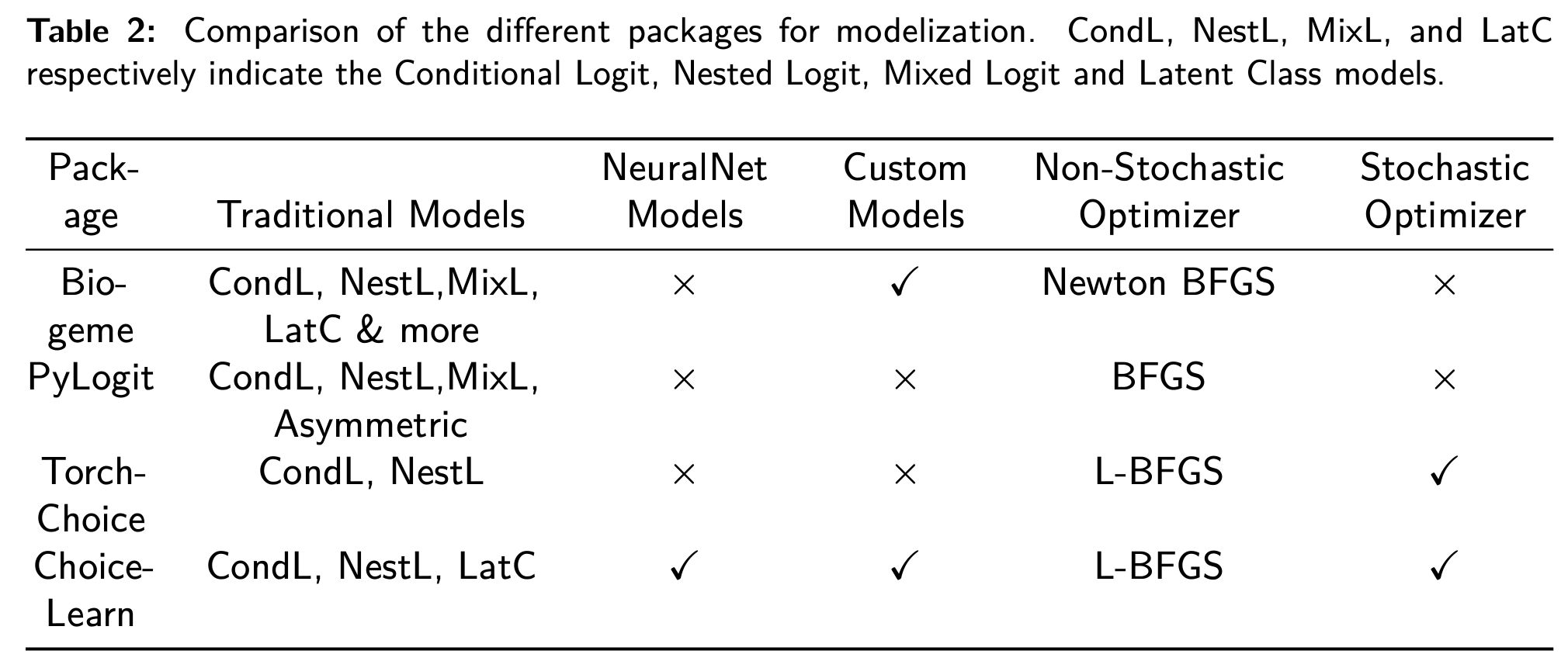

The main contributions are summarized in Tables 1 and 2.

Statement of need

Data and model scalability

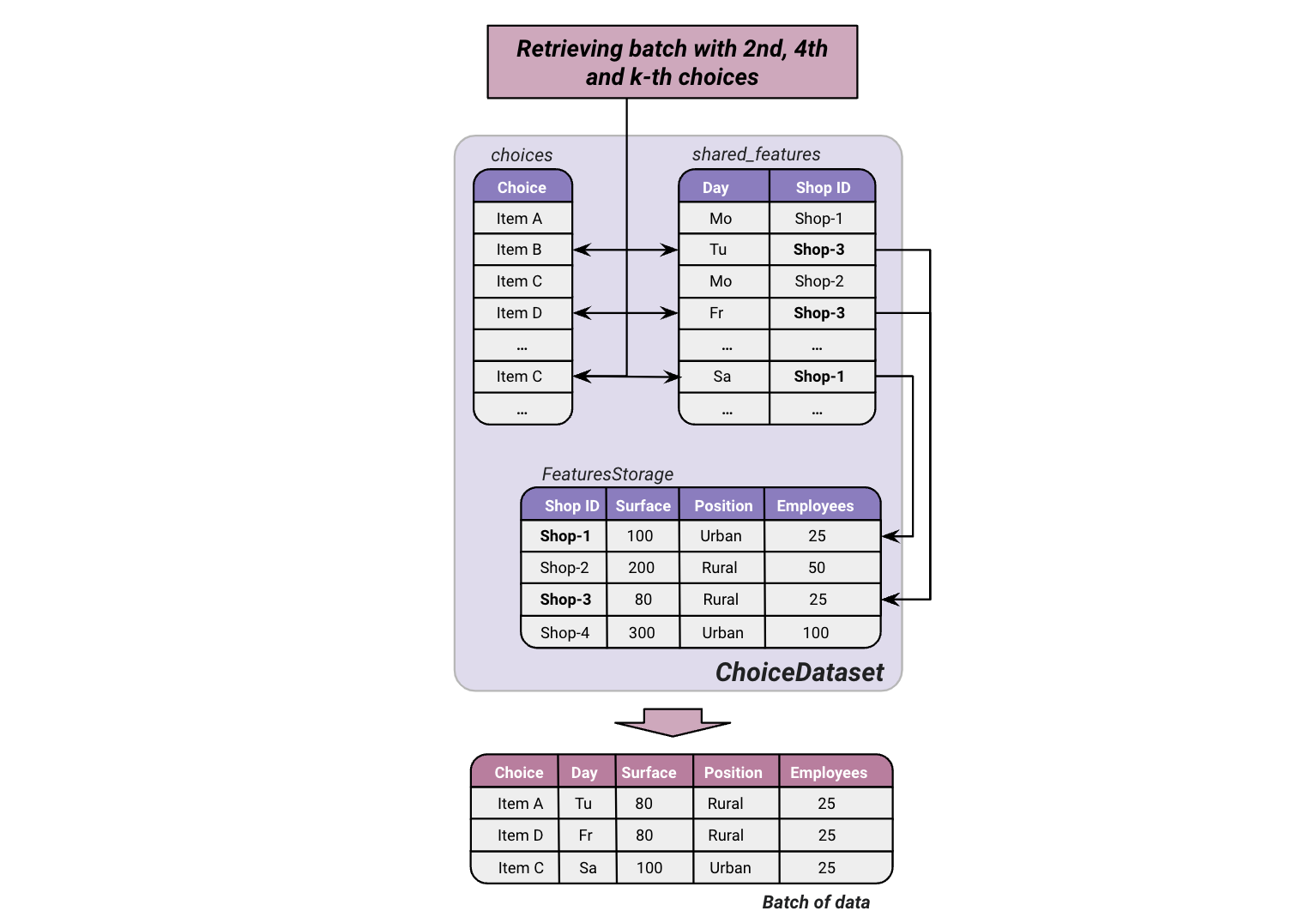

Choice-Learn's data management relies on NumPy (Harris et al., 2020) with the objective of limiting the memory footprint. It minimizes the repetition of item or customer features and defers the joining of the full data structure until batches of data are processed. The package introduces the FeaturesStorage object, illustrated in Figure 2, which allows feature values to be referenced only by their ID. During batching, each ID placeholder is replaced on the fly by the corresponding feature values. For instance, supermarket features such as surface area or position are often stationary. They can therefore be stored in an auxiliary data structure, and in the main dataset the store where the choice is recorded is referenced only by its ID.
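
The idea of referencing features by ID and joining them only at batch time can be sketched in a few lines of plain Python. This is a toy illustration and not the FeaturesStorage API: the store IDs, the feature values, and the `iter_batches` helper are all assumptions made for the example.

```python
# Toy illustration of ID-based feature storage (not the Choice-Learn API).

# Auxiliary structure: the features of each store are kept exactly once.
# Store IDs and feature values are made up for this example.
store_features = {
    "store_a": (120.0, 0.0),  # (surface area, position), hypothetical values
    "store_b": (450.0, 1.0),
    "store_c": (80.0, 2.0),
}

# Main dataset: each recorded choice only carries the ID of its store.
choices = [
    {"store_id": "store_a", "choice": 2},
    {"store_id": "store_b", "choice": 0},
    {"store_id": "store_a", "choice": 1},
    {"store_id": "store_c", "choice": 2},
    {"store_id": "store_b", "choice": 0},
]


def iter_batches(records, features_by_id, batch_size):
    """Yield (features, chosen) batches, replacing each ID by its features on the fly."""
    for start in range(0, len(records), batch_size):
        batch = records[start:start + batch_size]
        features = [features_by_id[record["store_id"]] for record in batch]
        chosen = [record["choice"] for record in batch]
        yield features, chosen


batches = list(iter_batches(choices, store_features, batch_size=2))
```

The full feature rows exist only for the batch currently being processed; the main dataset never stores more than one ID per choice.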

The package builds on TensorFlow (Abadi et al., 2015) for model estimation, offering the possibility to use fast quasi-Newton optimization algorithms such as L-BFGS (Nocedal & Wright, 2006) as well as various gradient-descent optimizers (Kingma & Ba, 2017; Tieleman & Hinton, 2012) specialized in handling batches of data. Finally, the TensorFlow backbone ensures efficient usage in a production environment, for instance within an assortment recommendation software, through deployment and serving tools such as TFLite and TFServing.
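
To make batch-based estimation concrete, the following minimal sketch fits a one-parameter logit model by plain mini-batch gradient ascent on the log-likelihood. It uses only the Python standard library, so it is neither the TensorFlow implementation nor L-BFGS; the observations, the single price coefficient `beta`, and the hyperparameters are assumptions chosen for illustration.

```python
import math

# Hypothetical observations: (prices of three alternatives, index of the chosen one).
observations = [
    ([1.0, 2.0, 3.0], 0),
    ([2.0, 1.0, 3.0], 1),
    ([3.0, 2.0, 1.0], 2),
    ([1.0, 3.0, 2.0], 0),
    ([2.0, 3.0, 1.0], 2),
    ([1.5, 1.0, 2.0], 0),  # the cheapest alternative is not chosen here
    ([1.0, 2.0, 1.5], 2),  # nor here
    ([3.0, 1.0, 2.0], 1),
]


def probabilities(beta, prices):
    """Softmax of the linear utilities U(i) = beta * price_i."""
    utilities = [beta * price for price in prices]
    max_u = max(utilities)  # subtracted for numerical stability
    exp_u = [math.exp(u - max_u) for u in utilities]
    total = sum(exp_u)
    return [e / total for e in exp_u]


def mean_nll(beta, data):
    """Average negative log-likelihood of the observed choices."""
    return -sum(math.log(probabilities(beta, prices)[chosen])
                for prices, chosen in data) / len(data)


def fit(data, batch_size=4, learning_rate=0.1, epochs=50):
    """Mini-batch gradient ascent on the log-likelihood, starting from beta = 0."""
    beta = 0.0
    for _ in range(epochs):
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grad = 0.0
            for prices, chosen in batch:
                probs = probabilities(beta, prices)
                expected_price = sum(p * x for p, x in zip(probs, prices))
                # d/d(beta) of log P(chosen) = price_chosen - E_P[price]
                grad += prices[chosen] - expected_price
            beta += learning_rate * grad / len(batch)
    return beta


beta_hat = fit(observations)
```

On this toy data the estimated price coefficient comes out negative, as expected when cheaper alternatives are chosen more often, and the fitted model has a lower average negative log-likelihood than the uniform model obtained with `beta = 0`.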

Flexible usage: From linear utility to customized specification

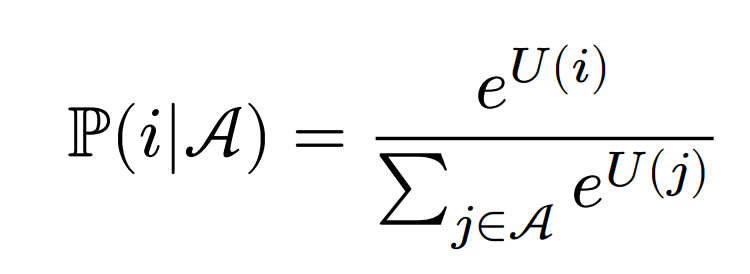

Choice models following the Random Utility Maximization principle (McFadden & Train, 2000) define the utility of an alternative 𝑖 ∈ 𝒜 as the sum of a deterministic part 𝑈(𝑖) and a random error 𝜖𝑖. If the terms (𝜖𝑖)𝑖∈𝒜 are assumed to be independent and Gumbel-distributed, the probability of choosing alternative 𝑖 can be written as the softmax normalization over the available alternatives 𝑗 ∈ 𝒜:

$$\mathbb{P}(i \mid \mathcal{A}) = \frac{e^{U(i)}}{\sum_{j \in \mathcal{A}} e^{U(j)}}$$
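
As a numerical illustration of this softmax normalization, the sketch below computes choice probabilities from given deterministic utilities and restricts the normalization to the alternatives that are available. The function name `choice_probabilities` and the utility values are assumptions for the example, not part of Choice-Learn.

```python
import math


def choice_probabilities(utilities, available):
    """Softmax over the available alternatives; unavailable ones get probability 0."""
    # Subtract the largest available utility for numerical stability.
    max_u = max(u for u, a in zip(utilities, available) if a)
    exp_u = [math.exp(u - max_u) if a else 0.0
             for u, a in zip(utilities, available)]
    total = sum(exp_u)
    return [e / total for e in exp_u]


utilities = [1.0, 0.5, -0.2]  # hypothetical deterministic utilities U(i)
full = choice_probabilities(utilities, [True, True, True])
reduced = choice_probabilities(utilities, [True, False, True])
```

Removing the second alternative from the assortment sets its probability to zero and redistributes its share over the remaining alternatives, which is how such a model handles assortment variations.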

The choice modeler's job is to formulate an appropriate utility function 𝑈(.) depending on the context. In Choice-Learn, the user can parametrize predefined models or freely specify a custom utility function. To declare a custom model, one needs to inherit from the ChoiceModel class and override the compute_batch_utility method, as shown in the documentation.

Library of traditional random utility models and machine learning-based models

Traditional parametric choice models, including the Conditional Logit (Train et al., 1987), often specify the utility function in a linear form. This provides interpretable coefficients but limits the predictive power of the model. Recent works propose the estimation of more complex models, with neural network approaches (Aouad & Désir, 2022; Han et al., 2022) and tree-based models (Aouad et al., 2023; Salvadé & Hillel, 2024). While existing choice libraries (Bierlaire, 2023; Brathwaite & Walker, 2018; Du et al., 2023) are often not designed to integrate such machine learning-based approaches, Choice-Learn proposes a collection that includes both types of models.

Downstream operations: Assortment and pricing optimization

Choice-Learn offers additional tools for downstream operations, which are not usually integrated in choice modeling libraries. In particular, assortment optimization is a common use case that leverages a choice model to determine the optimal subset of alternatives to offer customers in order to maximize a certain objective, such as the expected revenue, conversion rate, or social welfare. This framework captures a variety of applications such as assortment planning, display location optimization, and pricing. We provide implementations based on the mixed-integer programming formulation described in (Méndez-Díaz et al., 2014), with the option to choose the solver between Gurobi (Gurobi Optimization, LLC, 2023) and OR-Tools (Perron & Furnon, 2024).
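
For a small number of alternatives, the assortment optimization problem can be illustrated by exhaustive enumeration. The sketch below is a brute-force search under a multinomial logit model with a no-purchase option; it is not the mixed-integer formulation of Méndez-Díaz et al. (2014) and does not use Gurobi or OR-Tools. The utilities, prices, and function names are assumptions for the example.

```python
import math
from itertools import combinations


def expected_revenue(assortment, utilities, prices, outside_utility=0.0):
    """Expected revenue per customer under a logit model with a no-purchase option."""
    weights = {i: math.exp(utilities[i]) for i in assortment}
    denominator = math.exp(outside_utility) + sum(weights.values())
    return sum(prices[i] * w / denominator for i, w in weights.items())


def best_assortment(utilities, prices, max_size=None):
    """Enumerate every non-empty subset and keep the one with the highest revenue."""
    n_items = len(utilities)
    max_size = n_items if max_size is None else max_size
    best, best_revenue = (), 0.0
    for size in range(1, max_size + 1):
        for subset in combinations(range(n_items), size):
            revenue = expected_revenue(subset, utilities, prices)
            if revenue > best_revenue:
                best, best_revenue = subset, revenue
    return best, best_revenue


utilities = [1.0, 0.8, 0.2, 1.5]  # hypothetical deterministic utilities
prices = [10.0, 8.0, 12.0, 4.0]   # hypothetical prices
assortment, revenue = best_assortment(utilities, prices)
```

Enumeration scales exponentially with the number of alternatives, which is why integer programming formulations and dedicated solvers are used for realistic problem sizes. In this toy instance, offering every alternative is not optimal: the cheap but attractive fourth item would divert demand from more profitable ones.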

Memory usage: a case study

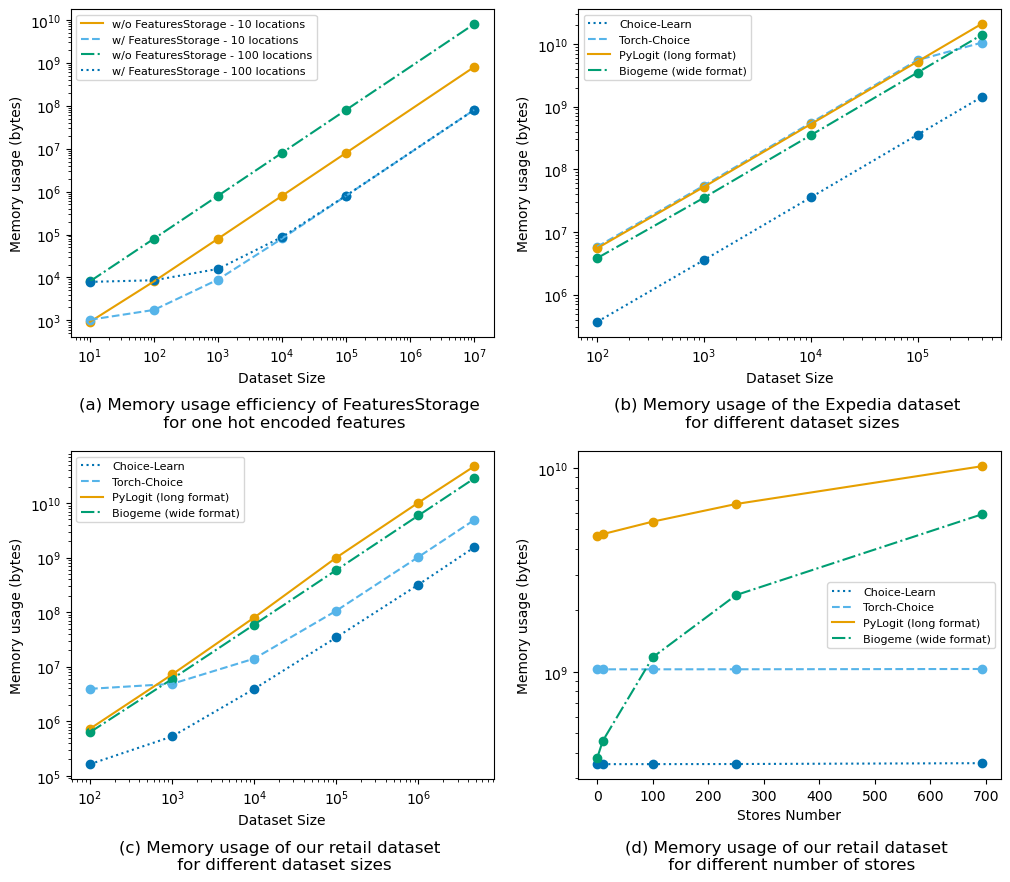

Figure 3 (a) presents numerical examples of memory usage to showcase the efficiency of FeaturesStorage. We consider a feature repeated in a dataset, such as a one-hot encoding for locations, represented by a matrix of shape (#locations, #locations) where each row refers to one location.
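
A back-of-the-envelope calculation shows why referencing such a one-hot feature by ID saves memory. The sketch below only counts stored values and ignores container overhead; the dataset size, the number of locations, and the byte widths are assumptions and are not the figures reported in Figure 3.

```python
def one_hot_memory(n_rows, n_locations, bytes_per_value=4, bytes_per_id=4):
    """Rough byte counts for a one-hot location feature, ignoring container overhead."""
    # Repeated storage: every row of the dataset carries its full one-hot vector.
    repeated = n_rows * n_locations * bytes_per_value
    # ID-based storage: one (#locations, #locations) matrix plus one ID per row.
    by_id = n_locations * n_locations * bytes_per_value + n_rows * bytes_per_id
    return repeated, by_id


# Hypothetical sizes: one million recorded choices across 100 locations.
repeated, by_id = one_hot_memory(n_rows=1_000_000, n_locations=100)
ratio = repeated / by_id
```

Under these assumptions the repeated encoding needs 400,000,000 bytes against 4,040,000 bytes for the ID-based layout, a ratio of roughly 99, and the gap widens as the number of locations grows.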

We compare four data handling methods on the Expedia dataset (Ben Hamner et al., 2013): pandas.DataFrames (The pandas development team, 2020) in long and wide format, both used in choice modeling packages, Torch-Choice, and Choice-Learn. Figure 3 (b) shows the results for various sample sizes.

Finally, in Figure 3 (c) and (d), we observe memory usage gains on a proprietary dataset in brick-and-mortar retailing, consisting of the aggregation of more than 4 billion purchases in Konzum supermarkets in Croatia. The dataset focuses on the coffee subcategory and specifies, for each purchase, which products were available, their prices, and a one-hot representation of the supermarket.
