A step-by-step guide to collecting and preprocessing satellite imagery data for machine learning algorithms

This article is the first part of a two-part series exploring the use of machine learning algorithms on satellite imagery. This part focuses on data collection and preprocessing. The second part will present the use of various machine learning algorithms.

TL;DR

This article will:

We will use the detection and classification of agricultural fields as an example. Python and Jupyter Notebook are used for the preprocessing steps. The Jupyter notebook for following along is available on GitHub.

This article assumes basic knowledge of data science and Python.

Computer vision is a fascinating branch of machine learning that has developed rapidly in recent years. With public access to many standard algorithm libraries (TensorFlow, OpenCV, PyTorch, fastai...) and open-source datasets (CIFAR-10, ImageNet, IMDB...), it is very easy for a data scientist to gain hands-on experience in this field.

But did you know that you can also access open-source satellite imagery? This kind of imagery can be used in a wide variety of use cases (agriculture, logistics, energy...). However, its size and complexity pose additional challenges for a data scientist. I wrote this article to share some of my key learnings from working with these images.

Step 1 - Choose the open satellite data source

First, you need to choose the satellite you want to use. The main characteristics to look at are:

Here are satellites whose data are freely available:

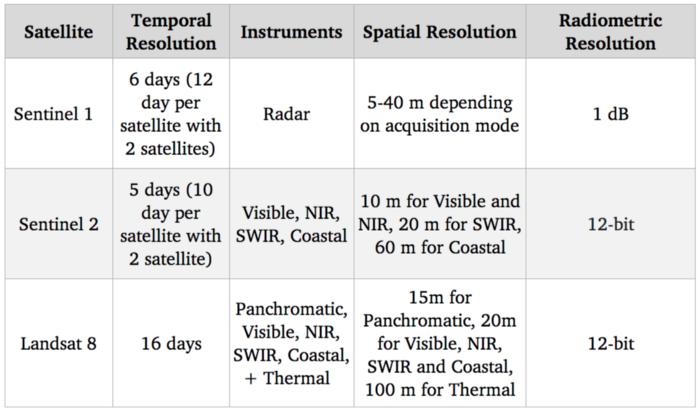

Benchmarking of satellite systems

Since our goal is to detect and identify agricultural crops, the visible spectrum (used to create standard color images) should be quite useful. In addition, there are correlations between the nitrogen content of plants and the NIR (near-infrared) spectral bands. Among the free options, Sentinel-2 currently offers the best resolution for these spectral bands, at 10 m, so this is the satellite we will use.

Of course, a 10 m resolution is much coarser than the state of the art in satellite imagery, where commercial satellites reach 30 cm. Access to commercial satellite data can unlock many use cases, but despite growing competition from new providers entering the market, it remains expensive and therefore hard to integrate into a use case at scale.

Step 2 - Collect the satellite imagery data from Sentinel-2

Now that we have chosen our satellite source, the next step is to download the images. Sentinel-2 is part of the European Space Agency's Copernicus programme. We can access the data through the SentinelSat Python API.

First, we need to create an account on the Copernicus Open Access Hub. Alternatively, you can query images on this website through a graphical user interface.

One way to specify the zones to explore is to provide the API with a GeoJSON file. This is a JSON file containing the GPS coordinates (latitude and longitude) of a zone. GeoJSON files can be created quickly on this website.

This lets you query all satellite images that intersect the specified area.

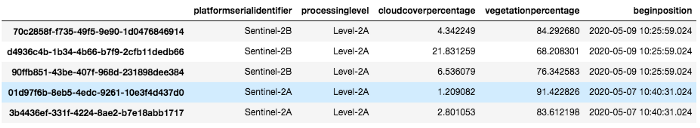
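If you prefer to generate the GeoJSON file in code, the standard library is enough. This is a minimal sketch: the helper name `bbox_to_geojson` and the coordinates (a small rectangle roughly around Paris) are hypothetical. Note that GeoJSON (RFC 7946) stores positions as [longitude, latitude], the reverse of the usual "lat, lon" order, and that a polygon ring must be closed by repeating its first position.

```python
import json

def bbox_to_geojson(min_lon, min_lat, max_lon, max_lat):
    """Build a GeoJSON FeatureCollection containing one rectangular polygon."""
    # GeoJSON positions are [longitude, latitude]; a polygon ring is closed
    # by repeating its first position at the end.
    ring = [
        [min_lon, min_lat],
        [max_lon, min_lat],
        [max_lon, max_lat],
        [min_lon, max_lat],
        [min_lon, min_lat],
    ]
    return {
        "type": "FeatureCollection",
        "features": [
            {
                "type": "Feature",
                "properties": {},
                "geometry": {"type": "Polygon", "coordinates": [ring]},
            }
        ],
    }

# Hypothetical zone; save this text to the file used as geojson_path.
zone = bbox_to_geojson(2.2, 48.8, 2.5, 48.9)
geojson_text = json.dumps(zone, indent=2)
```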

The following code lists all images available for a given zone and time period:

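from datetime import date
from sentinelsat import SentinelAPI, read_geojson, geojson_to_wkt
# credentials (a dict with "username" and "password") and geojson_path are assumed to be defined earlier
api = SentinelAPI(
    credentials["username"],
    credentials["password"],
    "https://scihub.copernicus.eu/dhus"
)
# Convert the GeoJSON zone to the WKT string expected by the query
shape = geojson_to_wkt(read_geojson(geojson_path))
images = api.query(
    shape,
    date=(date(2020, 5, 1), date(2020, 5, 10)),
    platformname="Sentinel-2",
    processinglevel="Level-2A",
    cloudcoverpercentage=(0, 30)
)
images_df = api.to_dataframe(images)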

Note the use of the following arguments:

images_df

images_df is a pandas DataFrame containing all the images that match our query. We pick one with low cloud cover and download it via the API:

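# images_df is indexed by product UUID; pick the image with the lowest cloud cover
uuid = images_df.sort_values("cloudcoverpercentage").index[0]
api.download(uuid)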

Step 3 - Understand which spectral bands to use and manage quantization

Once the zip file is downloaded, we can see that it contains several images.

This is because, as described in Step 1, Sentinel-2 captures many spectral bands, not just red, green and blue, and each band is stored as a separate image.
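To inspect the archive from Python rather than a file browser, the standard `zipfile` module is enough. The sketch below lists the JPEG 2000 (`.jp2`) images inside a downloaded product and extracts it; the helper names and the archive path you pass in are hypothetical placeholders.

```python
import zipfile

def list_band_images(zip_path):
    """Return the sorted paths of all .jp2 images inside a product archive."""
    with zipfile.ZipFile(zip_path) as archive:
        return sorted(name for name in archive.namelist() if name.endswith(".jp2"))

def extract_product(zip_path, target_dir):
    """Extract the whole product archive into target_dir."""
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target_dir)
```

For example, `for name in list_band_images("product.zip"): print(name)` prints one line per band image, which makes the per-resolution folder layout easy to see.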

The instruments also do not all have the same spatial resolution. If we check the GRANULE/<image_id>/IMG_DATA folder, we see a subfolder for each resolution: 10, 20 and 60 meters. We are going to use the 10 m bands, B02, B03, B04 and B08, which are blue, green, red and NIR. Bands 5, 6 and 7, the vegetation red edge bands (spectral bands between red and NIR that mark a transition in reflectance for vegetation), could also be interesting for detecting crops, but since their resolution is lower (20 m) we leave them aside for now.
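A small lookup table helps keep band codes and file names straight. The resolutions below are the nominal native Sentinel-2 MSI values. The file-name pattern `<tile>_<datetime>_<band>_<resolution>.jp2` is an assumption that matches the `im.split("_")[2]` logic in the loading code, so check it against your own download. The sample file name in the test is hypothetical.

```python
# Nominal Sentinel-2 MSI bands: code -> (description, native resolution in meters).
# B10 (cirrus) is only distributed in Level-1C products, not in Level-2A.
BANDS = {
    "B01": ("coastal aerosol", 60),
    "B02": ("blue", 10),
    "B03": ("green", 10),
    "B04": ("red", 10),
    "B05": ("vegetation red edge", 20),
    "B06": ("vegetation red edge", 20),
    "B07": ("vegetation red edge", 20),
    "B08": ("NIR", 10),
    "B8A": ("narrow NIR", 20),
    "B09": ("water vapour", 60),
    "B10": ("SWIR cirrus", 60),
    "B11": ("SWIR", 20),
    "B12": ("SWIR", 20),
}

def bands_at(resolution):
    """Band codes natively acquired at the given resolution (in meters)."""
    return [code for code, (_, res) in BANDS.items() if res == resolution]

def parse_band_filename(filename):
    """Split a name assumed to follow <tile>_<datetime>_<band>_<resolution>.jp2."""
    stem = filename.rsplit(".", 1)[0]
    tile, acquired, band, resolution = stem.split("_")
    return {
        "tile": tile,
        "datetime": acquired,
        "band": band,
        "resolution_m": int(resolution.rstrip("m")),
    }

print(bands_at(10))  # ['B02', 'B03', 'B04', 'B08']
```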

Here we load each band as a 2D NumPy array:

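import os
import rasterio

def get_band(image_folder, band, resolution=10):
    """Load one spectral band of a Sentinel-2 L2A product as a 2D NumPy array."""
    # GRANULE contains a single subfolder whose name starts with "L" (e.g. L2A_...)
    subfolder = [f for f in os.listdir(image_folder + "/GRANULE") if f[0] == "L"][0]
    image_folder_path = f"{image_folder}/GRANULE/{subfolder}/IMG_DATA/R{resolution}m"
    image_files = [im for im in os.listdir(image_folder_path) if im[-4:] == ".jp2"]
    # File names follow <tile>_<datetime>_<band>_<resolution>.jp2: the band code is the third field
    selected_file = [im for im in image_files if im.split("_")[2] == band][0]
    with rasterio.open(f"{image_folder_path}/{selected_file}") as infile:
        img = infile.read(1)
    return img

# image_folder is the path of the extracted .SAFE product folder
band_dict = {}
for band in ["B02", "B03", "B04", "B08"]:
    band_dict[band] = get_band(image_folder, band, 10)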

A quick test to make sure everything works is to recreate the true-color (i.e. RGB) image and display it with OpenCV and Matplotlib:

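import cv2
import matplotlib.pyplot as plt
# Stack red (B04), green (B03) and blue (B02) into an (H, W, 3) array in RGB order
img = cv2.merge((band_dict["B04"], band_dict["B03"], band_dict["B02"]))
plt.imshow(img)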

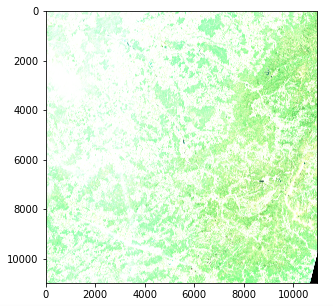

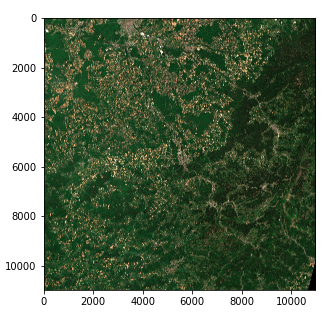

Satellite image - color intensity problem (Copernicus Sentinel data 2020)

However, there is a problem with color intensity. This is because the quantization (number of possible values) differs from what Matplotlib expects by default: either 0-255 integer values or 0-1 float values. Standard images often use 8-bit quantization (256 possible values), but Sentinel-2 images use 12-bit quantization, and post-processing converts the values to 16-bit integers (65,536 values).
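The arithmetic behind these bit depths, and the effect of dividing by 256 or by 8 on a single pixel value, can be checked in a few lines. This sketch uses plain integers. Clipping to 255 is our assumption about how saturated pixels should be handled. The helper names are hypothetical.

```python
def levels(bits):
    """Number of distinct values representable with the given bit depth."""
    return 2 ** bits

def to_uint8(value, divisor):
    """Scale a 16-bit value down to 0-255, clipping anything brighter."""
    return min(255, value // divisor)

print(levels(8), levels(12), levels(16))  # 256 4096 65536
print(to_uint8(16752, 256))  # 65: even the brightest pixel stays fairly dark
print(to_uint8(1000, 256))   # 3: a value of 1000 becomes almost black
print(to_uint8(1000, 8))     # 125: the same value becomes mid-grey
print(to_uint8(16752, 8))    # 255: saturated (anything above 8 * 255 = 2040)
```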

To scale a 16-bit integer down to an 8-bit integer, we should in theory divide by 256, but this produces a very dark image because the image does not use the full range of possible values. The maximum value in our image is 16752 out of 65536, and only a few pixels exceed 4000, so dividing by 8 actually gives an image with decent contrast.

import numpy as np
# Divide by 8 and clip to the 8-bit range; values above 8 * 255 = 2040 saturate at 255
img_processed = np.clip(img / 8, 0, 255).astype(np.uint8)
plt.imshow(img_processed)
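A fixed divisor works for this scene but may not transfer to darker or brighter scenes. A common alternative, which is not the method used in this article, is a per-channel percentile stretch: map the 2nd and 98th percentiles of each band to 0 and 255 and clip everything outside that range. The function name and the default percentiles below are arbitrary choices for illustration.

```python
import numpy as np

def percentile_stretch(img, low=2, high=98):
    """Rescale each channel of an (H, W, C) array to uint8 using percentile bounds."""
    out = np.empty(img.shape, dtype=np.uint8)
    for c in range(img.shape[2]):
        band = img[..., c].astype(np.float64)
        lo, hi = np.percentile(band, [low, high])
        # A constant band has hi == lo; map it to 0 instead of dividing by zero.
        scale = 255.0 / (hi - lo) if hi > lo else 0.0
        out[..., c] = np.clip((band - lo) * scale, 0, 255).astype(np.uint8)
    return out
```

It can be used in place of the fixed divisor, e.g. `plt.imshow(percentile_stretch(img))`. The trade-off is that colors are no longer comparable across scenes, since each image gets its own bounds.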

Satellite image - corrected color intensity (Copernicus Sentinel data 2020)

We now have a 3-dimensional tensor of shape (10980, 10980, 3) of 8-bit integers, stored as a NumPy array.

Note that:

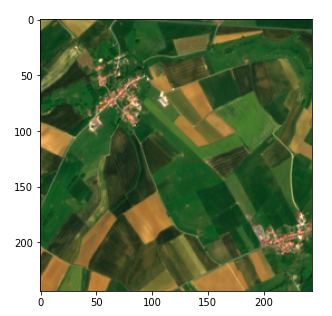

Step 4 - Split the images into sizes suitable for machine learning

We could try to apply an algorithm to our current image, but in practice this would not work with most techniques because of the size of the image. Even with substantial computing power, most algorithms (and deep learning in particular) would be ruled out.
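To put the size in perspective, here is the arithmetic for a single 10 m Sentinel-2 tile of 10980 × 10980 pixels. The byte counts assume a dense, uncompressed NumPy array.

```python
side = 10980                      # pixels per side of a 10 m tile
pixels = side * side              # pixels per band
rgb_uint8 = pixels * 3 * 1        # 3 channels at 1 byte each
bands_uint16 = pixels * 4 * 2     # B02, B03, B04, B08 at 2 bytes each

print(pixels)                       # 120560400
print(side * 10 / 1000)             # 109.8 (km per side)
print(round(rgb_uint8 / 2**20))     # 345 (MiB)
print(round(bands_uint16 / 2**20))  # 920 (MiB)
```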

So we need to split the image into fragments. One question is how to choose the fragment size.

We choose to split each image into a 45 × 45 grid (conveniently, 45 is a divisor of 10980), which gives fragments of 244 × 244 pixels, i.e. about 2.44 km per side at 10 m resolution.
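Because the grid must divide the image side exactly, it helps to list the candidate grid sizes and the fragment size each one produces. The 100-500 pixel window below is an arbitrary range chosen for illustration.

```python
side = 10980  # pixels per side of the 10 m image

# Every grid count that splits the side into equal fragments with no remainder.
candidates = [n for n in range(1, side + 1) if side % n == 0]

for n in candidates:
    frag = side // n
    if 100 <= frag <= 500:
        # Fragment size in pixels and in kilometers (10 m per pixel).
        print(f"{n} x {n} grid -> {frag} px fragments ({frag * 10 / 1000} km)")
```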

Once the parameters are set, this step is easy with NumPy array manipulation. We store our fragments in a dict with (x, y) tuples as keys.

import itertools
frag_count = 45
# 10980 // 45 = 244 pixels per fragment side
frag_size = img_processed.shape[0] // frag_count
frag_dict = {}
for y, x in itertools.product(range(frag_count), range(frag_count)):
    frag_dict[(x, y)] = img_processed[y*frag_size:(y+1)*frag_size,
                                      x*frag_size:(x+1)*frag_size, :]
plt.imshow(frag_dict[(10, 10)])

Fragment of the satellite image (Copernicus Sentinel data 2020)
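The loop above can also be written as a single reshape, which avoids Python-level iteration. This is a sketch of an equivalent NumPy idiom, not the author's code. It assumes the image height and width are both divisible by `frag_count`, which holds for 10980 and 45. Here `tiles[y, x]` holds the same pixels as `frag_dict[(x, y)]`.

```python
import numpy as np

def split_into_tiles(img, frag_count):
    """Split an (H, W, C) image into a (frag_count, frag_count, h, w, C) grid of tiles."""
    height, width, channels = img.shape
    h, w = height // frag_count, width // frag_count
    # (H, W, C) -> (rows, h, cols, w, C) -> (rows, cols, h, w, C)
    return img.reshape(frag_count, h, frag_count, w, channels).swapaxes(1, 2)
```

For a contiguous array the result is typically a view, so no pixel data are copied. Writing into a tile would therefore also modify the original image.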

Step 5 - Link your data to the satellite images by converting GPS to UTM

Finally, we need to link our images to the data we have about agricultural fields to enable a supervised machine learning approach. In our case, the data come as a list of GPS coordinates (latitude, longitude), similar to the GeoJSON we used in Step 2, and we want to find their pixel positions. For clarity, I first show the conversion from pixels to GPS coordinates and then the reverse.

The metadata collected when querying the SentinelSat API contain the GPS coordinates of the image corners (footprint column), but we want the coordinates of a specific pixel. Latitude and longitude are angular values and do not vary linearly across an image, so we cannot compute them with a simple ratio based on the pixel position. This can be solved with mathematical equations, but ready-made solutions already exist in Python.

A quick solution is to convert the pixel position to UTM (Universal Transverse Mercator), where a position is defined by a zone and an (x, y) position in meters that varies linearly across the image. We can then convert from UTM to latitude/longitude with the utm library. To do this, we first need the UTM zone and the position of the image's upper-left corner, both of which can be found in the metadata of the true-color image.
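Two details about the zone are worth knowing. The UTM zone number can be derived from longitude with the standard 6° formula (ignoring the special zones around Norway and Svalbard). Sentinel-2 file names start with a tile ID such as `T31UDQ`: the leading `T` is only a prefix, the next two digits are the zone number, and the fourth character is the latitude band. The helper names below are hypothetical, and the sample file name in the test is illustrative.

```python
def utm_zone_from_longitude(lon):
    """Standard UTM zone number (1-60) for a longitude in degrees.

    Ignores the Norway/Svalbard exceptions.
    """
    return int((lon + 180) // 6) % 60 + 1

def zone_from_tile_name(file_name):
    """Extract (zone_number, latitude_band) from a name like 'T31UDQ_..._TCI_10m.jp2'."""
    tile = file_name.split("_")[0]   # e.g. 'T31UDQ'
    return int(tile[1:3]), tile[3]    # skip the leading 'T' prefix
```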

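import os
import rasterio
import utm
# Get metadata from the true-color image; tci_file_path is assumed to point to the TCI .jp2 file
with rasterio.open(tci_file_path, driver="JP2OpenJPEG") as tci:
    transform = tci.transform
# File names start with the tile ID, e.g. T31UDQ: zone number "31", latitude band "U"
tci_file_name = os.path.basename(tci_file_path)
zone_number = int(tci_file_name[1:3])
zone_letter = tci_file_name[3]
# UTM coordinates (in meters) of the upper-left corner of the image
utm_x, utm_y = transform[2], transform[5]
# Convert the pixel position (pixel_column, pixel_row) to UTM: 10 m per pixel, rows go southwards
east = utm_x + pixel_column * 10
north = utm_y + pixel_row * -10
# Convert UTM to latitude and longitude
latitude, longitude = utm.to_latlon(east, north, zone_number, zone_letter)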

Converting a GPS position to pixels uses the inverse formulas. In this case, we need to make sure that the resulting zone matches our image, which we can do by forcing the zone number. If our object is not in the image, this yields an invalid pixel value (negative or larger than the image size).

# Convert latitude and longitude to UTM, forcing the zone of our image
east, north, zone_number, zone_letter = utm.from_latlon(
    latitude, longitude, force_zone_number=zone_number
)
# Convert UTM to column and row
pixel_column = round((east - utm_x) / 10)
pixel_row = round((north - utm_y) / -10)
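Because a point outside the tile silently yields an out-of-range pixel, it is worth checking bounds explicitly. The sketch below uses hypothetical helper names and assumes 10 m pixels, a 10980-pixel tile and the 244-pixel fragments from Step 4. It converts UTM coordinates to a pixel, returns `None` when the point falls outside the image, and can then report which `frag_dict` key contains the pixel. The origin values in the test are made up.

```python
def utm_to_pixel(east, north, utm_x, utm_y, resolution=10, size=10980):
    """Convert UTM coordinates to (column, row), or None if outside the image."""
    column = round((east - utm_x) / resolution)
    row = round((north - utm_y) / -resolution)  # rows grow southwards
    if 0 <= column < size and 0 <= row < size:
        return column, row
    return None

def fragment_of(column, row, frag_size=244):
    """Return the frag_dict key (x, y) and the pixel offset inside that fragment."""
    return (column // frag_size, row // frag_size), (column % frag_size, row % frag_size)
```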


Conclusion

We are now ready to use the satellite images for machine learning!

In the next article of this two-part series, we will see how to use these images to detect and classify field surfaces with supervised and unsupervised machine learning.

Thank you for reading, and feel free to follow the Artefact tech blog if you want to be notified when the next article comes out!
