At the Artefact Generative AI conference, held on April 20, 2023, key players in the field of generative AI shared their knowledge and exchanged ideas about this new technology and the ways companies can use it to boost business productivity.

The latest generative AI models can hold sophisticated conversations with users, create seemingly original content (images, audio, text) from their training data, and perform manual or repetitive tasks such as writing emails, coding, or synthesizing complex documents. For decision-makers, it is critical to develop a clear and compelling generative AI strategy today, and to prioritize data governance and the design of generative AI business solutions.

Conference hosts and keynote speakers:

Generative AI: exploring new creative frontiers

In his introduction to the conference, Vincent Luciani said: "People everywhere are excited about this new technology and the impact it will have on businesses and employees. Until now, AI gave us relatively deterministic applications, augmented by machine learning. We were able to predict, personalize, and optimize, but not really to create.

"But today, for the first time, we are seeing genuine interaction between humans and machines. A real form of intelligence is now emerging from this technology and these algorithms, even if the scientific community is divided over whether this is a revolution or an evolution...

"We have already talked about augmented humans or augmented activities: soon we will be talking about augmented companies." Before giving a quick overview of the conference topics and handing over to the keynote speakers, he reminded the audience that:

"Despite the constant stream of new generative AI applications, restraint is called for: successful business transformation doesn't happen overnight; it requires reflection, research, and preparation."
Vincent Luciani, CEO and co-founder of Artefact

Perspectives and opportunities in the generative AI market

The first keynote speaker, Hanan Ouazan, began with an overview of text models. He started with Google's groundbreaking 2017 paper "Attention Is All You Need", which led to the development of transformers. Transformers are the architecture underlying almost all large language models (LLMs) in use today. "As you know, research takes time, but today we are in a phase of acceleration, with new models appearing every day that benefit from better access to data and infrastructure."

Hanan explored several facets of the democratization and accessibility of generative AI models, highlighting in particular how quickly the technology is being adopted: "The pace is breathtaking: ChatGPT reached 1 million users in just five days."

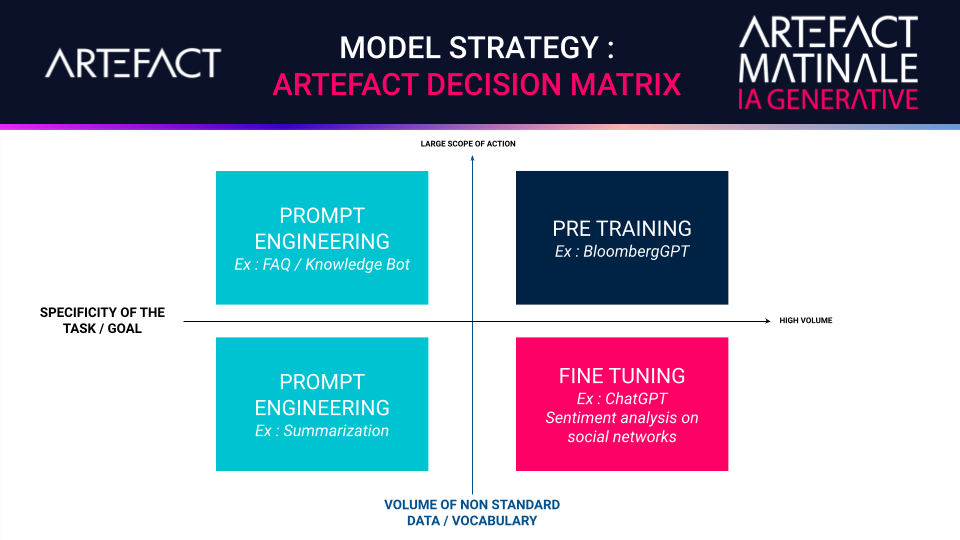

On LLM training strategies, he discussed the benefits of pre-training, fine-tuning, and prompt engineering. He cited industrial use cases for each method and presented Artefact's decision matrix for choosing a model strategy.

Beyond operating costs, performance, and limitations, Hanan also addressed change management and the potential impact of generative AI on jobs.

"It will certainly change the way we work, but at Artefact we don't believe it will eliminate professions: it will augment the people who practice them."
Hanan Ouazan, Partner, Data Science & Generative AI Lead at Artefact

A generative AI-powered photo platform for e-commerce

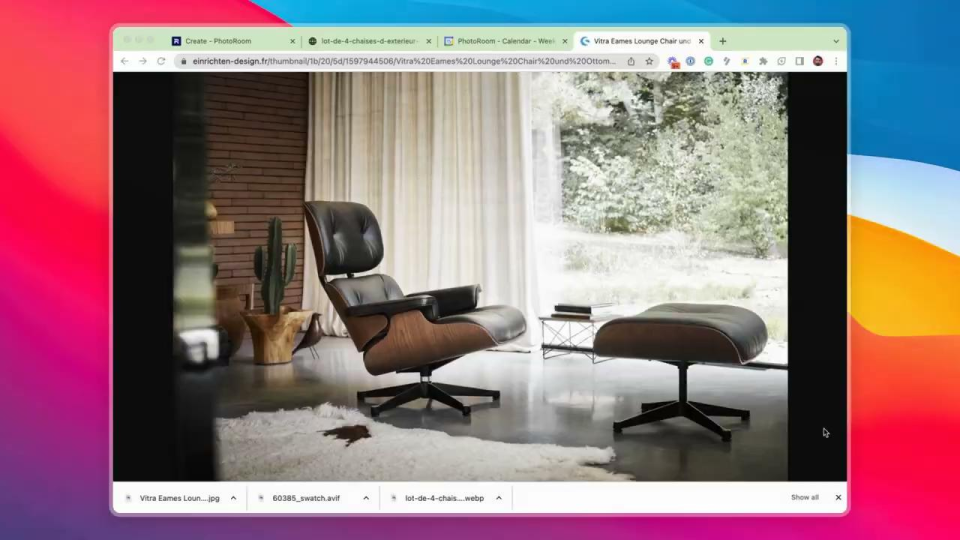

Keynote speaker Matthieu Rouif is CEO and co-founder of PhotoRoom. The app already lets its 80 million users create studio-quality photos with their smartphones using Stable Diffusion, a generative AI technology for images.

Since the boom in e-commerce marketplaces triggered by the COVID-19 pandemic, two billion photos have been edited every year. The PhotoRoom app accounts for a large share of them: it automates background removal, shadow rendering, and the generation of realistic backgrounds for merchants. "We use generative AI to offer our customers photos that look as if they were taken by a professional photographer, and we even add unique, realistic AI-generated backgrounds in less than a second," says Matthieu.

"We help our customers grow their business by giving them a wide range of high-quality, cost-effective photos that respect their brand and show their products in the best possible light, to attract and retain customers."
Matthieu Rouif, CEO and co-founder of PhotoRoom

Rethinking AI for business

Igor Carron is CEO and co-founder of LightOn, a French company that has developed Paradigm, a new generative AI platform that is more powerful than GPT-3. Paradigm provides state-of-the-art models that run on companies' own servers and data, which guarantees their data sovereignty.

In his keynote, Igor looked back at his company's origins. "When we founded LightOn in 2016, we were building hardware that used light to perform computations for AI. It was an unusual approach, but it worked: our Optical Processing Unit (OPU), the world's first photonic AI co-processor, is now used by researchers around the world and has been integrated into one of the world's largest supercomputers.

"Since 2020, after GPT came out, we have been working out how our hardware could be used to build our own LLMs, both for our own use and for external customers. We learned how to build LLMs and even got pretty good at it. But when we first talked to people in 2021 and 2022, they had no idea what GPT-3 was, so we had to educate our audience.

"Together with a customer, we developed a larger model with 40 billion parameters, which was recently released. It was trained in a unique way to compete with GPT-3 while using far fewer parameters. That means you can run it on much lighter infrastructure without it costing an arm and a leg."

Underlining the value of large language models, Igor says:

"I think most companies will be LLM-based in the future... These tools will enable them to generate real value from their own data."
Igor Carron, CEO and co-founder of LightOn

"What we offer our customers today is a product called Paradigm: it lets companies manage their own data flows within their organizations and reuse that data to retrain and improve these models. This ensures that their internal processes or products can benefit from the insights they gain from interacting with their LLMs.

"Many in the French or European ecosystem depend on the OpenAI API or on other North American competitors." Igor warns: "The danger when you send your data to a public API is that it gets reused to train subsequent models. Suppose people in the mining industry, who literally know where to find gold, send their technical data to generate reports... If, in a few years, you ask ChatGPT-8 or -9 or whatever: 'Where is the gold?', it will tell you where the gold is!" He strongly recommends that companies use the data they create internally to train their own models.

A national strategy for generative artificial intelligence

Yohann Ralle, the final keynote speaker, is a generative AI specialist at France's Ministry of the Economy, Finance, Industry and Digital Sovereignty. He began by laying out his "magic formula" for building a state-of-the-art LLM: computing power + datasets + fundamental research:

"The French government has invested in a shared digital computing resource, the Jean Zay supercomputer, which was built to provide computing power to the AI community. It made it possible to train the European multilingual BLOOM model."
Yohann Ralle, Generative AI Specialist at the French Ministry of the Economy, Finance, Industry and Digital Sovereignty

"As for datasets, initiatives such as Agdatahub have helped aggregate, annotate, and qualify training and test data in order to develop efficient, trustworthy AI that can also contribute to French competitiveness. As for fundamental research, the national strategy has helped structure the AI research and development ecosystem in France and Europe. It has done so through the creation of the 3IA institutes, funding for doctoral contracts, the launch of IRT Saint Exupéry and the SystemX project, numerous training programs for students, and much more."

Generative AI round-table discussion led by Vincent Luciani, CEO of Artefact

Has AI passed the Turing test (i.e., has AI reached human intelligence)?

Answers to this question varied, but there was general agreement that while intelligence is present, intentionality is not.

Matthieu: "I think it has... You feel that someone is there, as long as you don't ask about dates. There's a temporal side that doesn't work."

Igor: "My question is: why did you ask that question? Because the Turing test isn't very interesting for business. But in terms of interaction, you could say AI has passed the test."

Yohann: "The Turing test is very subjective. There's a risk when we attribute human qualities to AI, when we anthropomorphize it. Remember the case of Google engineer Blake Lemoine, who believed the LaMDA chatbot he was talking to had become sentient... The Turing test is an interesting exercise, nothing more."

Hanan: "As far as ChatGPT is concerned, we're close, but not there yet."

Is the launch of ChatGPT a revolution, an evolution, or part of a continuum?

Igor: "The long-term impact of ChatGPT on LLMs can't yet be fully understood, but new uses for these technologies will emerge over time and will have significant societal effects. The debate is interesting, but the most important factor is the actual scientific papers that will describe the improvements to LLMs in detail. The current and potential practical applications of these models should not be overlooked or underestimated."

What are the most promising use cases for businesses? Bots, image generation?

Hanan: "Chatbots have obviously always been important for AI and will remain a key use case, because with ChatGPT you can now set up a chatbot within 48 hours by connecting it to a database. Another use case is building autonomous agents that can carry out specific tasks without human intervention. One example is a travel agent that books all the hotels and restaurants for a trip to Italy."
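Hanan's description of "connecting a chatbot to a database" is not spelled out in the talk. The sketch below shows one minimal reading of it, under clearly hypothetical assumptions:

- `FAQ` stands in for an in-house database table.
- `retrieve` ranks entries by plain word overlap. This is a crude substitute for the embedding search a production system would more likely use.
- `build_prompt` assembles a grounded prompt for a chat model.

All names are illustrative. The actual call to a model such as ChatGPT is deliberately left out.

```python
import re
from collections import Counter

# Hypothetical in-house knowledge base; in practice this would come from a database query.
FAQ = {
    "What are your opening hours?": "We are open Monday to Friday, 9:00 to 18:00.",
    "How do I reset my password?": "Use the 'Forgot password' link on the login page.",
    "Do you ship to Italy?": "Yes, we ship to all EU countries, including Italy.",
}


def tokenize(text: str) -> Counter:
    """Lower-cased word counts: a crude stand-in for embeddings."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def retrieve(question: str, k: int = 1) -> list[tuple[str, str]]:
    """Return the k FAQ entries sharing the most words with the question."""
    q = tokenize(question)

    def overlap(item: tuple[str, str]) -> int:
        # Counter & Counter keeps the minimum count of each shared word.
        return sum((q & tokenize(item[0] + " " + item[1])).values())

    return sorted(FAQ.items(), key=overlap, reverse=True)[:k]


def build_prompt(question: str) -> str:
    """Assemble a prompt that asks the model to answer only from retrieved context."""
    context = "\n".join(f"Q: {q}\nA: {a}" for q, a in retrieve(question))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nUser question: {question}"
    )
```

For example, `print(build_prompt("Do you ship to Italy?"))` yields a prompt containing only the shipping entry. That prompt would then be sent to the chat model. Keeping retrieval separate from generation means the company's data stays in its own store and only the relevant snippet is sent to the model.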

Yohann: "I see lots of opportunities for ChatGPT-driven plugins, like Kayak or Booking. I think it will restructure the digital environment, with OpenAI bringing the aggregators together."

Igor: "I see an opportunity in customizing LLMs for businesses. Beyond structured data, companies will begin to understand how to use unstructured data and how to generate real value from their data internally with private LLMs. At the same time, I think the way people search and use the internet will change dramatically thanks to ChatGPT."

Vincent: "I think we'll see internal company data and LLMs merge into a kind of 'Master FAQ+' that can be queried by search or augmented agents. The concept of search queries is evolving: tomorrow, will people buy one or more keywords, or a concept? In advertising, the goal has always been to be people-based, audience-based. Now that we are protecting personal data, we are moving towards context-based advertising, and that can lead to more interesting ads."

How is generative AI being used in companies today? Is it affecting employment?

Matthieu: "We're lucky in that one of our competitive advantages is that AI is in our DNA. We encourage the use of generative tools internally. Our engineering team uses Copilot for development, and our developers use both ChatGPT and Copilot. These tools make us more creative. As for employment, we're growing, so we plan to hire new people... but at the same time, with great software, we can achieve more with smaller teams."

Igor: "We've always worked with a small team of seven or eight people to reach the same high technical level as, for example, Google, where teams are ten times larger. Our small teams have a completely disproportionate impact. It's a misconception to think you need a big team to achieve big things."

Yohann: "Ten years ago, a US study claimed that 47% of jobs would be lost to AI within 20 years, but we can see that this isn't happening. According to a more recent OECD study, the figure is closer to 14%. I think we should think in terms of tasks, not jobs. As a recent OpenAI study pointed out, 80 to 90% of jobs will be affected by generative AI, but what that really means is that 90% of workers will be affected in 10% of their tasks. What's interesting is that professions we thought were untouchable by AI are being called into question, for example in the creative industries, law, finance, and other fields. The French government has set up LaborIA to explore these questions."

What are the limits on these models in terms of sovereignty and regulation?

Hanan: "The first limit concerns intellectual property (IP). Today there are three types of models:

- public models such as ChatGPT, where the data you send can be used for commercial purposes;
- private models with no IP ownership, such as Google's API on LaMDA;
- self-hosted open-source models.

Data sovereignty is an issue, since GPT and PaLM are in American, not European, hands."

Yohann: "Regulation is a major issue in Europe. Italy has banned the use of ChatGPT outright while it investigates whether the application complies with GDPR data protection rules. OpenAI must be very clear about how it uses personal data. For example, it could display a disclaimer stating that it uses personal data, and give users the option to opt out of data collection and delete their data. Another limitation of LLMs is hallucination: they often give wrong answers. This can be serious when the query concerns a public figure and the model generates a 'fake news' story that can do real harm to that person."

Vincent: "Have you looked into the question of intellectual property and the issues raised by Getty Images' lawsuit against Stability AI? There are many questions about scraping the internet for images to train models..."

Yohann: "We're thinking about it. Open source could be a way to create clean, copyright-free databases and datasets that respect intellectual property."

Matthieu: "Regarding personal data and products: what makes ChatGPT, Midjourney, or PhotoRoom work well isn't personal data, it's customer feedback."

Yohann: "User feedback is ideal, but collecting it is prohibitively expensive in the case of LLMs."

Igor: "Where's the money? That's my question. All the problems you've raised are technical. We can't solve them until we have the funds to hire engineers and build an ecosystem, and we're simply not ready for that yet."

Do you think there will be a "GPU war" as more and more LLMs are built?

Yohann: "That's a real risk. Right now NVIDIA has a monopoly here: they control the market and prices. Unfortunately, there are no real competitors in Europe. It is by definition a limited resource, a scarce resource, so it's a serious battle."

Matthieu: "The lack of available GPUs is severely limiting not only our productivity but also the growth of companies across Europe."

Igor: "Because we started out as a hardware maker, we already faced this problem back in 2016... Today there are people working at our competitors whose full-time job is finding enough GPUs to train models... The market is exploding, but chip production can't keep up, anywhere in the world."

Hanan: "There will inevitably be a GPU shortage, but we can learn to become more efficient, and we have to. We also need to look at how to integrate open source into our companies, not just at how to use the latest technologies."

Where do you see the most value in the future? Open-source models? LLMs?

Matthieu: "At PhotoRoom we use open source, which lets us move faster and develop our own IP. We have a large Hugging Face community in Paris that gives us valuable feedback."

Igor: "We use LLMs, but we're not tied to that business model. We could use open source. The most important thing is that we have the ability and the know-how to reuse our proprietary data to train future models. The goal is an industry that customizes these models for other companies."

Yohann: "The development of open source, as opposed to proprietary work, has driven generative AI forward. The AI community has worked together so that other players can benefit from this fundamental research and build their own models. For now, I suspect these open-source models still perform below proprietary ones, but that may change. In any case, you have to wonder whether Google regrets having opened the doors to the technology behind ChatGPT!"

The round table was followed by an audience Q&A session, with a particularly lively discussion about the shortage of women in the tech industry. Yohann outlined several French government education initiatives aimed specifically at girls and women. Vincent talked about what the Artefact School of Data, the Women@Artefact initiative, and other tech companies are doing to improve the situation.

Other questions covered:

- inclusion in LLM use for people with autism and other disabilities;
- the problem of AI hallucinations;
- the measures companies intend to take to protect the environment;
- the role of Europe versus France in internet scraping for data.

To see how the panelists answered these questions, watch the conference replay.
