At the Artefact Generative AI Conference, held on 20 April 2023, leading figures in the field of generative AI shared their knowledge and exchanged ideas about this new technology and the ways businesses can use it to improve their productivity.

The latest generative AI models can hold sophisticated conversations with users, create seemingly original content (images, audio, text) from their training data, and carry out manual or repetitive tasks such as writing emails, coding, or summarizing complex documents. It is vital that decision-makers develop a clear and compelling generative AI strategy today, and that they prioritize data governance and the design of GenAI business solutions.

Conference hosts and keynote speakers:

Generative AI: exploring new creative frontiers

In his introduction to the conference, Vincent Luciani observed: "Everywhere, people are excited about this new technology and the impact it will have on organizations and employees. Until now, what we had in AI were relatively deterministic applications augmented by machine learning. We were able to predict, personalize and optimize, but not truly create.

"But today, for the first time, we are seeing genuine interaction between human and machine. A real form of intelligence is now emerging from this technology and these algorithms, even if the scientific community is divided on whether this is a revolution or an evolution..."

"We have already talked about augmented humans or augmented activities: soon we will be talking about augmented companies." Before giving a quick overview of the topics the conference would cover and handing over to the keynote speakers, he reminded the audience that:

"Despite the constant arrival of new generative AI applications, moderation is crucial: successful business transformation does not happen overnight. It requires reflection, research and preparation." Vincent Luciani, CEO and co-founder of Artefact

Outlook and opportunities in the generative AI market

The first keynote speaker, Hanan Ouazan, began with an overview of text models, starting with Google's groundbreaking 2017 paper "Attention Is All You Need", which led to the creation of the Transformers that underpin almost every large language model (LLM) in use today. "As you know, research takes time, but today we are in a period of acceleration, where we are seeing new models every day that capitalize on greater access to data and infrastructure."

Hanan explored several facets of the democratization and accessibility of generative AI models, highlighting in particular how quickly the technology is being adopted: "The pace is astonishing: ChatGPT reached one million users in just five days."

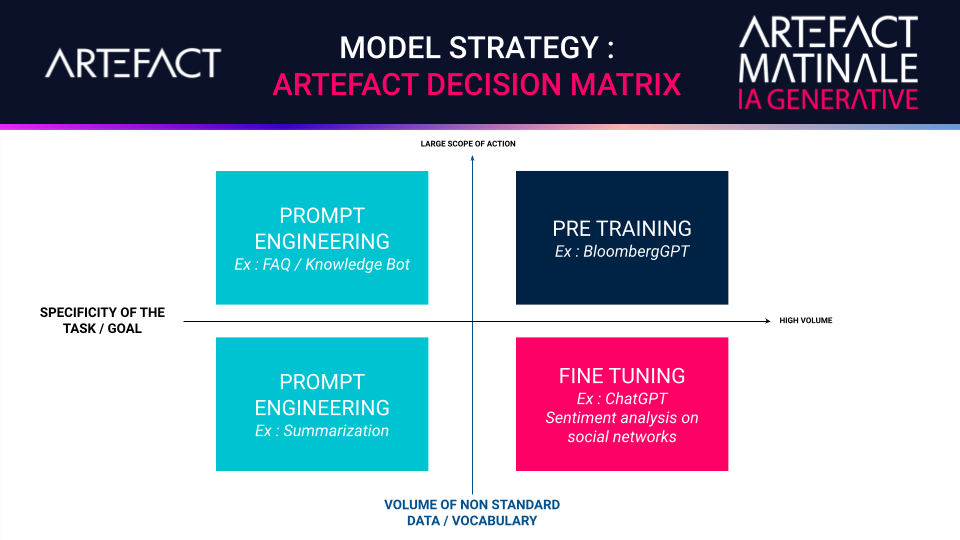

Turning to LLM training strategies, Hanan discussed the advantages of pretraining, fine-tuning and prompt engineering, citing industry use cases for each and presenting Artefact's model strategy decision matrix.

Along with cost of ownership, performance and limitations, Hanan also discussed change management and the ways generative AI could affect jobs.

"It will certainly change the way we work, but at Artefact we do not believe it will kill off professions: it will augment the humans who practice them." Hanan Ouazan, Partner Data Science & Lead Generative AI at Artefact

An e-commerce photography platform powered by generative AI

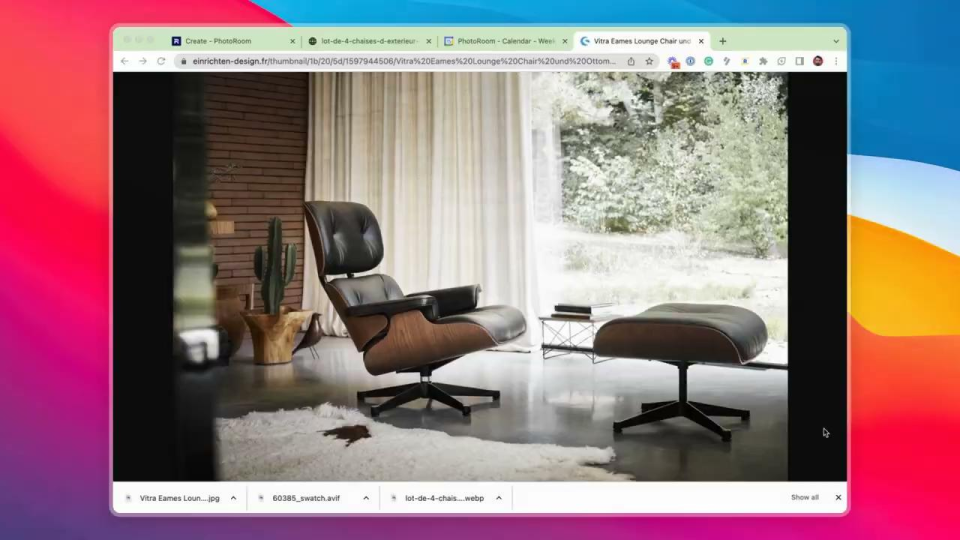

Keynote speaker Matthieu Rouif is CEO and co-founder of PhotoRoom, an app that lets its 80 million users to date create studio-quality photos with a smartphone, using Stable Diffusion, a generative AI technology for images.

Since the boom in e-commerce marketplaces spurred by the COVID-19 pandemic, two billion photos have been edited every year. The PhotoRoom app plays an important role by automating cutouts, shadow rendering and the generation of realistic backgrounds for merchants. "We use generative AI to give customers photos that look as if they were taken by a professional photographer, even adding unique, realistic AI-generated backgrounds in under a second," Matthieu explained.

"We help our customers grow their businesses by providing them with plentiful, high-quality, low-cost photos that respect their brand and present their products in the best possible way to attract and retain customers." Matthieu Rouif, CEO and co-founder of PhotoRoom

Rethinking AI for business

Igor Carron is CEO and co-founder of LightOn, the French company behind the new generative AI platform Paradigm, which is more powerful than GPT-3. It provides state-of-the-art models for managing servers and data while guaranteeing companies' data sovereignty.

In his keynote, Igor spoke about the origins of his company. "When we founded LightOn in 2016, we were building hardware that used light to perform computations for AI. It was an unusual approach, but it worked: our Optical Processing Unit (OPU), the world's first photonic AI co-processor, has now been used by researchers around the world and integrated into one of the world's largest supercomputers."

"Since 2020, after GPT appeared, we have worked to figure out how our hardware could be used to build our own LLMs, both for our own use and for external customers. We learned how to make LLMs and, in fact, we got pretty good at it. But when we first talked to people in 2021 and 2022, they had no idea what GPT-3 was, so we had to educate our target audience.

"We worked with a customer to create a larger model, recently released with 40 billion parameters, trained in a unique way to compete with GPT-3 while using far fewer parameters. That means you can run a much lighter hardware infrastructure and use the model without it costing you an arm and a leg."

Igor stressed the value of large language models, stating:

"I believe that, in the future, most companies will be built on LLMs... These tools will allow them to generate real value from their own data." Igor Carron, CEO and co-founder of LightOn

"What we offer customers today is a product called Paradigm: it lets companies manage their own data flows within their organizations and reuse that data to retrain and improve these models. This ensures that their internal processes or products can benefit from the intelligence gained from their interactions with their LLMs.

"Many in the French or European ecosystem depend on the OpenAI API or on other North American competitors," Igor warned. "The danger of sending your data to a public API is that it will be reused to train successive models. Say people in the mining industry, who literally know where to find gold, send their technical reports to that API... A few years from now, if you ask ChatGPT-8 or -9 or whatever, 'Where's the gold?', it will tell you where the gold is!" He strongly recommends that companies start using the data they generate internally to train their models.

A national strategy for artificial intelligence

Yohann Ralle, the final speaker, is a generative AI specialist at the French Ministry of the Economy, Finance and Industrial and Digital Sovereignty. He began by explaining his "magic formula" for building a state-of-the-art LLM: computing power + datasets + fundamental research.

"In terms of computing power, the French government has invested in a digital commons, the Jean Zay supercomputer, designed to serve the AI community. It made it possible to train the European multilingual model BLOOM." Yohann Ralle, Generative AI Specialist at the French Ministry of the Economy, Finance and Industrial and Digital Sovereignty

"As for datasets, initiatives such as Agdatahub have helped to aggregate, annotate and qualify training and test data in order to develop efficient and reliable AI, which can also contribute to French competitiveness. As for fundamental research, the national strategy has helped to structure the AI research and development ecosystem with the creation of the 3IA institutes, the funding of doctoral contracts, the launch of the IRT Saint Exupéry and SystemX projects, multiple student training programs, and much more across France and Europe."

Generative AI roundtable moderated by Vincent Luciani, CEO of Artefact

Has AI passed the Turing test (that is, has AI reached a human level of intelligence)?

Although the question drew mixed answers, the general consensus was that while intelligence is there, intentionality is not.

Matthieu: "I think so... You get the feeling there is someone there, as long as you don't ask about dates. There's a temporal side that doesn't work."

Igor: "My question is, why did you ask that question? Because the Turing test isn't very interesting for business. But in terms of interaction, yes, you can say that AI has passed the test."

Yohann: "The Turing test is very subjective. There is a risk when we attribute human qualities to AI, when we anthropomorphize. Remember the case of the Google engineer Blake Lemoine, who believed that LaMDA, the chatbot he was talking to, had become sentient... The Turing test is an interesting exercise, nothing more."

Hanan: "With ChatGPT, we're getting close, but we're not there yet."

Is the arrival of ChatGPT a revolution, an evolution, or part of a continuum?

Igor: "Although the long-term impact of ChatGPT on LLMs cannot yet be fully understood, new uses for these technologies will emerge over time and will have major societal implications. The debate is interesting, but what matters most are the actual scientific papers that will detail the improvements to LLMs. The current and potential practical applications of these models should not be overlooked or underestimated."

What are the most promising use cases for businesses? Bots, image generation?

Hanan: "Obviously, chatbots have always been important in AI and will remain a major use case, because now, with ChatGPT, you can set one up in 48 hours by connecting it to a database. It's incredible. Another use case is the creation of autonomous agents that can carry out specific tasks without human intervention, such as a travel agent that books all your tickets, hotels and restaurants for a trip to Italy."

Yohann: "I see a lot of opportunities for ChatGPT-powered plugins, such as Kayak or Booking. I think it will restructure the digital landscape, with OpenAI aggregating the aggregators."

Igor: "I foresee the possibility of customizing enterprise LLMs. Beyond data lakes, companies will start to understand how to use unstructured data and how to generate real value from their data internally with private LLMs. At the same time, I think we will see drastic changes in the way people search and use the internet thanks to ChatGPT."

Vincent: "I think internal company data and LLMs will merge into a kind of 'master FAQ+' that can be queried by search agents or augmented agents. The concept of the query is evolving: tomorrow, will people buy one or many keywords, or will they buy a concept? In advertising, the goal was always to target people, audiences; now, as we protect personal data, we are moving toward context-based advertising. And that may lead to more interesting advertising."

How is generative AI being used in organizations today? Is it affecting employment?

Matthieu: "We're lucky: one of our competitive advantages is that AI is in our DNA. Internally, we encourage the use of more generative tools. Our technical team uses Copilot for development, and our programmers use both ChatGPT and Copilot. We are more creative with these tools. As for employment, we're growing, so we plan to hire new people... but at the same time, when we have great software, we can do more with smaller teams."

Igor: "We have always worked with a small team (seven or eight people) to reach the same high levels of technical prowess as Google, for example, where teams are ten times larger. Our small teams have a completely disproportionate impact. It's a misconception that you need a large team to achieve great things."

Yohann: "Ten years ago, an American study claimed that 47% of jobs would be lost to AI within 20 years, but we can see that this is not the case. According to a more recent OECD study, the figure would be closer to 14%. I think we should think in terms of tasks, not jobs. As a recent OpenAI study mentions, between 80 and 90% of jobs will be affected by generative AI, but what that really means is that 90% of employees will be affected in 10% of their tasks. What is interesting is that professions we thought were beyond the reach of AI, such as those in creative, legal, financial and other fields, are now being called into question. The French government has set up Le LaborIA to help explore these issues."
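Yohann's distinction between jobs and tasks can be made concrete with a back-of-the-envelope calculation. The following is a minimal sketch, not a result from any of the studies cited: it takes the two round figures from the quote (90% of employees, 10% of their tasks) and assumes, purely for illustration, that every employee has the same number of equally weighted tasks. The function name is hypothetical.

```python
# Round figures taken from the quote above. The even weighting of tasks
# across employees is an assumption made only for this sketch.
share_of_employees_affected = 0.90  # "90% of employees will be affected..."
share_of_tasks_per_employee = 0.10  # "...in 10% of their tasks"


def overall_task_exposure(employee_share: float, task_share: float) -> float:
    """Fraction of all tasks in the workforce touched by generative AI,
    assuming every employee has the same number of equally weighted tasks."""
    return employee_share * task_share


exposure = overall_task_exposure(
    share_of_employees_affected, share_of_tasks_per_employee
)

print(f"Employees affected: {share_of_employees_affected:.0%}")
print(f"Share of all tasks affected: {exposure:.0%}")
```

Under these assumptions, a headline figure of 90% of employees corresponds to roughly 9% of all tasks, which is why counting tasks rather than jobs gives a very different picture.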

What are the limits around sovereignty and regulation for these models?

Hanan: "The first limit concerns intellectual property (IP). Today we have three types of models: public models, such as ChatGPT, where the data you send can be used for commercial purposes; private models without IP ownership, such as Google's API on LaMDA; and self-hosted open-source models. Data sovereignty is an issue, since GPT and PaLM are not European but American-owned."

Yohann: "Regulation is a big issue in Europe. Italy has banned the use of ChatGPT outright pending an investigation into whether the application complies with GDPR privacy rules. OpenAI needs to be extremely clear about how it uses personal data, for example by displaying a disclaimer stating that it uses personal data, and by allowing users to opt out of data collection and delete their data. Another issue related to the limitations of LLMs is hallucinations: they often give wrong answers, which can be serious if the request concerns a public figure and the model generates 'fake' news that can do real harm to the person in question."

Vincent: "Have you looked at the question of intellectual property and the issues raised by Getty Images' lawsuit against Stability AI? There are a lot of questions about scraping the internet for images to train models..."

Yohann: "We're thinking about it. Open source could be a way to create clean databases and datasets that are free of copyright and respect intellectual property."

Matthieu: "On personal data and products: what makes ChatGPT or Midjourney or PhotoRoom work well is not personal data, but customer feedback."

Yohann: "User feedback is ideal, but collecting it is prohibitively expensive in the case of LLMs."

Igor: "Where's the money? That's my question. All the problems you've raised are technical, and we can't solve them until we have the funds to hire engineers and get an ecosystem up and running, and we're simply not ready yet."

With more and more LLMs being built, do you think there will be a GPU "war"?

Yohann: "It's a real risk. Right now there is an NVIDIA monopoly: they control the market and the prices. Unfortunately, there are no real competitors in Europe. It is a limited resource by definition, a scarce resource, so it's a serious battle."

Matthieu: "The lack of GPU availability severely limits not only our productivity, but the growth of companies across Europe."

Igor: "Since we started out as hardware makers, we were already facing this problem in 2016... Today, there are people working for our competitors whose full-time job is to find enough GPUs to train models... The market is exploding, but chip production can't keep up... anywhere in the world."

Hanan: "There will inevitably be a GPU bottleneck, but we can learn to be more efficient, and we have to. And we need to look at how we can integrate open source into our companies, not just how to use all the latest technologies."

Where do you see the most value for the future? Open-source models? LLMs?

Matthieu: "At PhotoRoom we use open source. It lets us move faster and develop our own IP. There is a large Hugging Face community in Paris that gives us essential feedback."

Igor: "We use LLMs, but we're not wedded to that business model. We could use open source. What matters is being able to reuse our proprietary data to train future models, and knowing how to do it. The goal is an industry that customizes these models for other companies."

Yohann: "Open source, as opposed to proprietary development, is what has driven generative AI. The AI community collaborated so that other players could benefit from this fundamental research to build their own models. I wonder whether the performance of these open-source models may fall short of that of proprietary models, but that could change. In any case, one might ask whether Google regrets having opened the door to the technology behind ChatGPT."

The roundtable was followed by a Q&A session with the audience, with a particularly lively discussion about the lack of women in tech. Yohann outlined several of the education measures the French government is taking that are aimed specifically at girls and women, while Vincent spoke about what the Artefact School of Data, the Women@Artefact initiative and other tech companies are doing to try to redress the situation.

Questions were also raised about the inclusion of people with autism and other disabilities in the use of LLMs; the problem of hallucinations in AI; the measures companies plan to take to protect the environment; and the role of Europe versus France with regard to internet scraping for data. To see how the participants answered these questions, watch the conference replay.
