25 November 2020

Call center advisors are starting to see NLU appear in their daily work, making it easier for them to answer customer requests. To do so, a tool must recognize both the customer's request and its characteristics at the same time: in other words, an intent and named entities.

"OK Google, play the Rolling Stones on Spotify.", "Alexa, what's the weather like in Paris today?", "Siri, who is the French president?".

If you have ever used a voice assistant, you have indirectly used Natural Language Understanding (NLU) processes. The same logic applies to chatbot assistants and to the automatic routing of customer-service tickets. NLU has been part of our daily lives for some time, and that is unlikely to change.

By automating the extraction of customer intent, for example, NLU can help us answer our customers' requests faster and more accurately. This is why many large organizations have started developing their own solutions. But with so many NLU libraries and models available, each claiming state-of-the-art or easy-to-achieve results, it can be hard to find your way. After experimenting with different libraries in our NLU projects at Artefact, we wanted to share our results and help you better understand the current NLU tools.

What is NLU?

Gartner defines Natural Language Understanding (NLU) as "the comprehension by computers of the structure and meaning of human language (e.g., English, Spanish, Japanese), allowing users to interact with the computer using natural sentences". In other words, NLU is a subfield of artificial intelligence that interprets text by analyzing it, converting it into a representation a computer can process, and producing output in a form humans can understand.

If you look closely at how chatbots and virtual assistants work, from your request to their answer, NLU is the layer that extracts your main intent and all the information the machine needs to answer your request as well as possible. Say you call the customer service of your favorite brand to find out whether your dream bag is finally available in your city. NLU tells the assistant that you have a product-availability request, and the assistant then searches the product database for that specific item to check whether it is available at your chosen location. Thanks to NLU, we have extracted an intent, a product name and a location.
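The following is a minimal Python sketch of what an NLU layer might pass to the rest of an assistant in the handbag example. It shows an intent plus entities, followed by a downstream database lookup. The intent label `product_availability`, the entity keys and the in-memory `PRODUCT_DB` are hypothetical illustrations, not part of any tool discussed in this article. A real system would obtain `NLUResult` from an NLU model and query an actual product database.

```python
from dataclasses import dataclass, field


@dataclass
class NLUResult:
    """Hypothetical output of an NLU layer: one intent plus named entities."""
    intent: str
    entities: dict[str, str] = field(default_factory=dict)


# Hypothetical stand-in for a product database: (product, city) -> in stock?
PRODUCT_DB = {
    ("dream bag", "paris"): True,
    ("dream bag", "lyon"): False,
}


def answer(result: NLUResult) -> str:
    """Route on the intent, then use the entities to query the database."""
    if result.intent != "product_availability":
        return "Sorry, I can only check product availability."
    product = result.entities.get("product_name")
    location = result.entities.get("location")
    if product is None or location is None:
        return "Which product and which city are you interested in?"
    in_stock = PRODUCT_DB.get((product.lower(), location.lower()), False)
    return f"{product} is {'available' if in_stock else 'not available'} in {location}."


# What an NLU layer might return for "Is the Dream Bag available in Paris yet?"
parsed = NLUResult(
    intent="product_availability",
    entities={"product_name": "Dream Bag", "location": "Paris"},
)
print(answer(parsed))  # Dream Bag is available in Paris.
```

Keeping the NLU output as a small typed structure separates "understanding" from "answering". The same `answer` logic works whichever NLU library fills in the intent and entities.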

(Above: illustration of a customer intent and several entities extracted from a conversation)

Natural language is embedded in most companies' data. Recent breakthroughs in the field, including the democratization of NLU algorithms and access to more computing power and more data, have led to many NLU projects being launched. Let's look at one of them.

Project overview

As mentioned earlier, a typical NLU project is helping call center advisors answer customer requests more easily during the call. This requires two different tasks:

- Understand the customer's intent during the call (i.e., text classification)

- Capture the key elements needed to answer the customer's request (i.e., named-entity recognition), for example contract numbers, product type, product color, etc.
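Both tasks can be trained from the same annotated utterance. The intent is a single label for text classification, and the entities are character-offset spans for named-entity recognition. The sketch below shows one way to represent and sanity-check such an annotation. The `Span`/`Example` types and the label names are hypothetical and do not follow any particular tool's schema.

```python
from typing import NamedTuple


class Span(NamedTuple):
    start: int  # character offset, inclusive
    end: int    # character offset, exclusive
    label: str


class Example(NamedTuple):
    text: str
    intent: str            # target of the text-classification task
    entities: list[Span]   # targets of the named-entity recognition task


def check_example(ex: Example) -> list[str]:
    """Return each entity's surface string; raise if a span is malformed."""
    ordered = sorted(ex.entities)  # sorts by start offset first
    for span in ordered:
        if not (0 <= span.start < span.end <= len(ex.text)):
            raise ValueError(f"span {span} out of bounds for {ex.text!r}")
    # Overlapping spans are rejected, since flat NER formats cannot express them
    for a, b in zip(ordered, ordered[1:]):
        if b.start < a.end:
            raise ValueError(f"overlapping spans {a} and {b}")
    return [ex.text[s.start:s.end] for s in ordered]


ex = Example(
    text="My contract number is 12345 and I want a navy sweater",
    intent="buy_product",
    entities=[
        Span(22, 27, "contract_number"),
        Span(41, 45, "product_color"),
        Span(46, 53, "product_type"),
    ],
)
print(check_example(ex))  # ['12345', 'navy', 'sweater']
```

Checks like these are cheap to run over a whole annotated dataset before training. They catch off-by-one offsets that would otherwise silently degrade entity scores.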

When we first looked for simple, off-the-shelf solutions for these two tasks, we found more than a dozen frameworks, some developed by the GAFAM and some by open-source contributors. There was no way to know which one to choose for our use case, or how each would perform on a concrete project with real data: here, call center audio conversations transcribed into text. That is why we decided to share our performance benchmark, along with some tips and the pros and cons of each solution we tested.

Note that this benchmark was run on English data made of transcribed speech. It is therefore a less reliable reference for other languages or for applications that use text written directly by users, e.g., chatbot use cases.

Benchmark

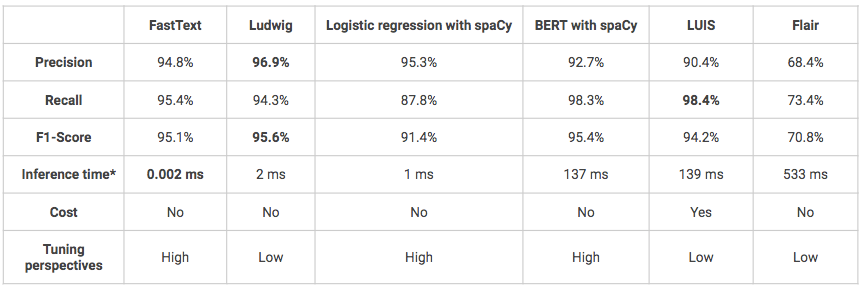

Intent detection

The goal here is to detect what the customer wants: his or her intent. Given a sentence, the model must assign it to the right class, each class corresponding to a predefined intent. When there are more than two classes, the task is called multiclass classification. An intent could be, for example, "wantsToBuyProduct" or "seeksInformation". In our case, we had 5 different intents, and the following six solutions were used for the benchmark:
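To make the multiclass setup concrete, here is a minimal from-scratch sketch of a classifier that assigns each utterance to one of several predefined intents. It uses multinomial Naive Bayes over bag-of-words counts. This technique was chosen only because it fits in a few lines of standard-library Python. It is not one of the six benchmarked solutions, and the toy utterances and intent labels are hypothetical.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    # Naive lowercase + whitespace split; real pipelines use spaCy or similar
    return text.lower().split()


class NaiveBayesIntentClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)  # intent -> word -> count
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def predict(self, text):
        n_docs = sum(self.class_counts.values())
        v = len(self.vocab)
        best, best_score = None, -math.inf
        for c in self.classes:
            # log P(intent) + sum of log P(word | intent), smoothed
            score = math.log(self.class_counts[c] / n_docs)
            for w in tokenize(text):
                if w not in self.vocab:
                    continue  # ignore words never seen in training
                score += math.log((self.word_counts[c][w] + 1) / (self.total_words[c] + v))
            if score > best_score:
                best, best_score = c, score
        return best


texts = [
    "i want to buy a red bag", "can i order this sweater",
    "where is my order", "track my delivery please",
    "what are your opening hours", "when does the store open",
]
labels = ["buy_product", "buy_product", "order_status", "order_status",
          "store_info", "store_info"]

clf = NaiveBayesIntentClassifier().fit(texts, labels)
print(clf.predict("i would like to buy a bag"))  # buy_product
```

Whatever the model, the contract is the same: text in, exactly one label from a fixed set out. That is what allows the solutions below to be compared on equal terms.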

- FastText: a library for efficient learning of word representations and sentence classification, created by Facebook's AI Research lab.

- Ludwig: a toolbox for training and testing deep learning models without writing code, via the command line or the programmatic API. The user only needs to provide a CSV file (or a pandas DataFrame with the programmatic API) containing the data, a list of columns to use as inputs and a list of columns to use as outputs; Ludwig does the rest.

- Logistic regression with spaCy preprocessing: classic logistic regression from the scikit-learn library, with custom preprocessing using the spaCy library (tokenization, lemmatization, stop-word removal).

- BERT with spaCy pipeline: spaCy model pipelines that wrap Hugging Face's transformers package, giving easy access to state-of-the-art transformer architectures such as BERT.

- LUIS: a Microsoft cloud API service that applies custom machine-learning intelligence to a user's conversational, natural-language text to predict intents and entities.

- Flair: a framework for state-of-the-art NLP covering tasks such as named entity recognition (NER), part-of-speech (PoS) tagging, sense disambiguation and classification.
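The tools above expect training data in different shapes. fastText's supervised mode reads one example per line, with the label marked by the default `__label__` prefix. Ludwig, as described above, takes a CSV with input and output columns. The sketch below writes the same labelled utterances in both shapes. The column names `utterance` and `intent` are hypothetical, and no Ludwig configuration is shown.

```python
import csv
import io


def to_fasttext_lines(texts, labels):
    """fastText supervised format: '__label__<label> <text>', one per line."""
    lines = []
    for text, label in zip(texts, labels):
        clean = " ".join(text.split())    # a newline would split the example
        tag = label.replace(" ", "_")     # labels must not contain spaces
        lines.append(f"__label__{tag} {clean}")
    return "\n".join(lines) + "\n"


def to_csv(texts, labels, text_col="utterance", label_col="intent"):
    """Two-column CSV of the kind a tool like Ludwig takes as input."""
    buf = io.StringIO()
    writer = csv.writer(buf)              # quotes fields that contain commas
    writer.writerow([text_col, label_col])
    writer.writerows(zip(texts, labels))
    return buf.getvalue()


texts = ["I want a\nred bag", "Hi, where is my order?"]
labels = ["buy product", "order_status"]
print(to_fasttext_lines(texts, labels))
print(to_csv(texts, labels))
```

Generating every tool's input from one canonical dataset helps keep a benchmark fair. All models then see exactly the same utterances and labels.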

All the following models were trained and tested on the same datasets: 1,600 utterances for training and 400 for testing. The models were not fine-tuned, so some of them may achieve better performance than what is presented below.

*Inference time measured on a local MacBook Air (1.6 GHz dual-core Intel Core i5, 8 GB 1600 MHz DDR3 RAM).
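The 1,600/400 split described above is an 80/20 split. The article does not say how it was drawn. The sketch below assumes a seeded split stratified by intent, so that each of the intents keeps roughly the same share in both sets. With uneven class sizes, rounding can shift the totals by a few utterances.

```python
import random
from collections import defaultdict


def stratified_split(examples, labels, test_fraction=0.2, seed=42):
    """Seeded train/test split that keeps each label's proportion roughly equal."""
    by_label = defaultdict(list)
    for ex, label in zip(examples, labels):
        by_label[label].append(ex)
    rng = random.Random(seed)              # fixed seed -> reproducible split
    train, test = [], []
    for label in sorted(by_label):         # sorted for deterministic order
        items = by_label[label][:]
        rng.shuffle(items)
        n_test = round(len(items) * test_fraction)
        test += [(ex, label) for ex in items[:n_test]]
        train += [(ex, label) for ex in items[n_test:]]
    return train, test


# Hypothetical data: 2,000 utterances spread evenly over 5 intents
utterances = [f"utterance {i}" for i in range(2000)]
intents = [f"intent_{i % 5}" for i in range(2000)]
train, test = stratified_split(utterances, intents)
print(len(train), len(test))  # 1600 400
```

Fixing the seed means every model in the benchmark can be evaluated on the identical 400 test utterances.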

- Overall, all solutions achieve good or even very good results (F1-score > 70%).

- One drawback of Ludwig and LUIS is that they are very much "black-box" models, which makes them harder to understand and tune.

- LUIS is the only tested solution that is not open source, which makes it considerably more expensive. In addition, its Python API can be complex to use, because the service was originally designed to be used through a point-and-click interface. However, it may be the preferred solution if your project is headed for production and its infrastructure is built on Azure, for example, since integrating the model is then simpler.
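The F1-score quoted above is the harmonic mean of precision and recall. The article does not state how it was averaged across the 5 intents. The sketch below assumes an unweighted (macro) average of per-intent F1 scores. The toy labels are hypothetical.

```python
def per_class_f1(y_true, y_pred):
    """F1 = 2PR / (P + R) for each class, with P = TP/(TP+FP), R = TP/(TP+FN)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = {}
    for c in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores[c] = 2 * precision * recall / denom if denom else 0.0
    return scores


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 (an assumed averaging choice)."""
    scores = per_class_f1(y_true, y_pred)
    return sum(scores.values()) / len(scores)


y_true = ["buy", "buy", "info", "info"]
y_pred = ["buy", "info", "info", "info"]
print(per_class_f1(y_true, y_pred))  # buy ~0.667, info 0.8
print(round(macro_f1(y_true, y_pred), 4))  # 0.7333
```

Macro averaging treats rare and frequent intents equally. A weighted average would instead favor the frequent ones, so the choice matters when intents are imbalanced.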

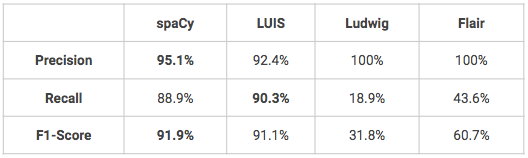

Entity extraction

The goal is to find specific words and classify them correctly into predefined categories. Once you have detected what your customer wants to do, you may need to find more information in his or her request. For example, if a customer wants to buy something, you may want to know which product and in which color; if a customer wants to return a product, you may want to know on which date or in which store the purchase was made. In our case, we defined 16 custom entities: 9 product-related entities (name, color, type, material, size, ...) and additional entities related to geography and time. As for intent detection, several solutions were benchmarked:
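Many NER tools learn from per-token tags rather than raw character spans. A common scheme is BIO: B- marks the first token of an entity, I- a following token, and O a token outside any entity. The sketch below converts character spans to BIO tags over whitespace tokens. The whitespace tokenizer and the entity labels are simplifying assumptions, not the tokenization of any benchmarked tool.

```python
import re


def spans_to_bio(text, spans):
    """Convert (start, end, label) character spans into per-token BIO tags.

    Tokens are whitespace-delimited; a token gets an entity tag only if it
    lies entirely inside that entity's span.
    """
    result = []
    for match in re.finditer(r"\S+", text):
        tok_start, tok_end = match.span()
        tag = "O"
        for start, end, label in spans:
            if start <= tok_start and tok_end <= end:
                tag = ("B-" if tok_start == start else "I-") + label
                break
        result.append((match.group(), tag))
    return result


text = "I want the Dream Bag in navy blue"


def span_of(phrase, label):
    # Hypothetical helper: span of the first occurrence of `phrase` in `text`
    start = text.index(phrase)
    return (start, start + len(phrase), label)


spans = [span_of("Dream Bag", "product_name"), span_of("navy blue", "product_color")]
for token, tag in spans_to_bio(text, spans):
    print(token, tag)
```

The B-/I- distinction is what lets a model represent multi-word entities such as product names, which are common among the product-related entities described above.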

- spaCy: an open-source library for advanced natural language processing in Python that offers various features, including named entity recognition.

- LUIS: see above

- Ludwig: see above

- Flair: see above

All the following models were trained and tested on the same datasets: 1,600 utterances for training and 400 for testing. The models were not fine-tuned, so some of them may achieve better performance than what is presented below.

- Two models perform very well at custom named-entity recognition: spaCy and LUIS. Ludwig and Flair would need some fine-tuning to achieve better results, especially in terms of recall.

- One advantage of LUIS is that users can rely on advanced entity-recognition features such as descriptors, which hint that certain words and phrases belong to an entity's domain vocabulary (e.g., color vocabulary = black, white, red, blue, navy, green).
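Recall in entity extraction is the share of annotated entities the model actually finds. Precision is the share of predicted entities that are correct. The article does not specify its entity-matching rule. The sketch below assumes strict exact matching on (utterance id, start, end, label), a common convention. The toy annotations are hypothetical.

```python
def entity_prf(gold, predicted):
    """Exact-match precision, recall and F1 over (doc_id, start, end, label) tuples."""
    gold, predicted = set(gold), set(predicted)
    tp = len(gold & predicted)                     # entities found with the right span and label
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(gold) if gold else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


gold = [
    (0, 11, 20, "product_name"), (0, 24, 33, "product_color"),
    (1, 5, 10, "location"), (1, 14, 24, "date"),
]
# A cautious model: everything it predicts is right, but it misses half the entities
predicted = [(0, 11, 20, "product_name"), (1, 5, 10, "location")]
print(entity_prf(gold, predicted))  # precision 1.0, recall 0.5, F1 ~0.667
```

A model in this situation looks accurate on the entities it returns but leaves many fields empty. This is the kind of recall gap that fine-tuning would aim to close.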

Conclusion

Among the solutions tested on our call center dataset, none stands out in terms of performance, whether for intent detection or entity recognition. In our experience, the choice of one solution over another should therefore be based on practical usability and on your specific use case (are you already using Azure? do you want more freedom to fine-tune your models? ...). As a reminder, we used the libraries as-is to produce this benchmark, without fine-tuning the models, so the results shown should be interpreted with caution and may vary for a different use case or with more training data.
