Author

In today's digital era, organizations face the challenge of keeping pace with an unprecedented rate of data generation and a plethora of business systems and digital technologies that collect all kinds of data. Added to this is the need to analyze these large volumes of data quickly and effectively to generate insights and intelligence that maximize their business value. As a result, big data platforms have become an essential foundation for organizations to efficiently deploy data solutions that deliver timely, data-driven business decisions and competitive advantages.

"Data analytics and intelligence solutions are proliferating across organizations to enable business growth. Organizations must build big data platforms as solid foundations for deploying data solutions at scale. These data platforms must be designed specifically for the business, since they are only as good as the business insight and intelligence they enable; and they must be built to be future-ready, benefiting from constant advances in data services and infrastructure technologies." Oussama Ahmad, Data Consulting Partner at Artefact

Key objectives of the Big Data platform

Big data platforms aim to break down data silos and integrate the different types of data sources needed to implement advanced data analytics and intelligence solutions. They provide a scalable and flexible infrastructure for collecting, storing, and analyzing large volumes of data from multiple sources. These platforms should leverage best-in-class data management services and technologies and meet three key objectives:

Big Data platform infrastructure

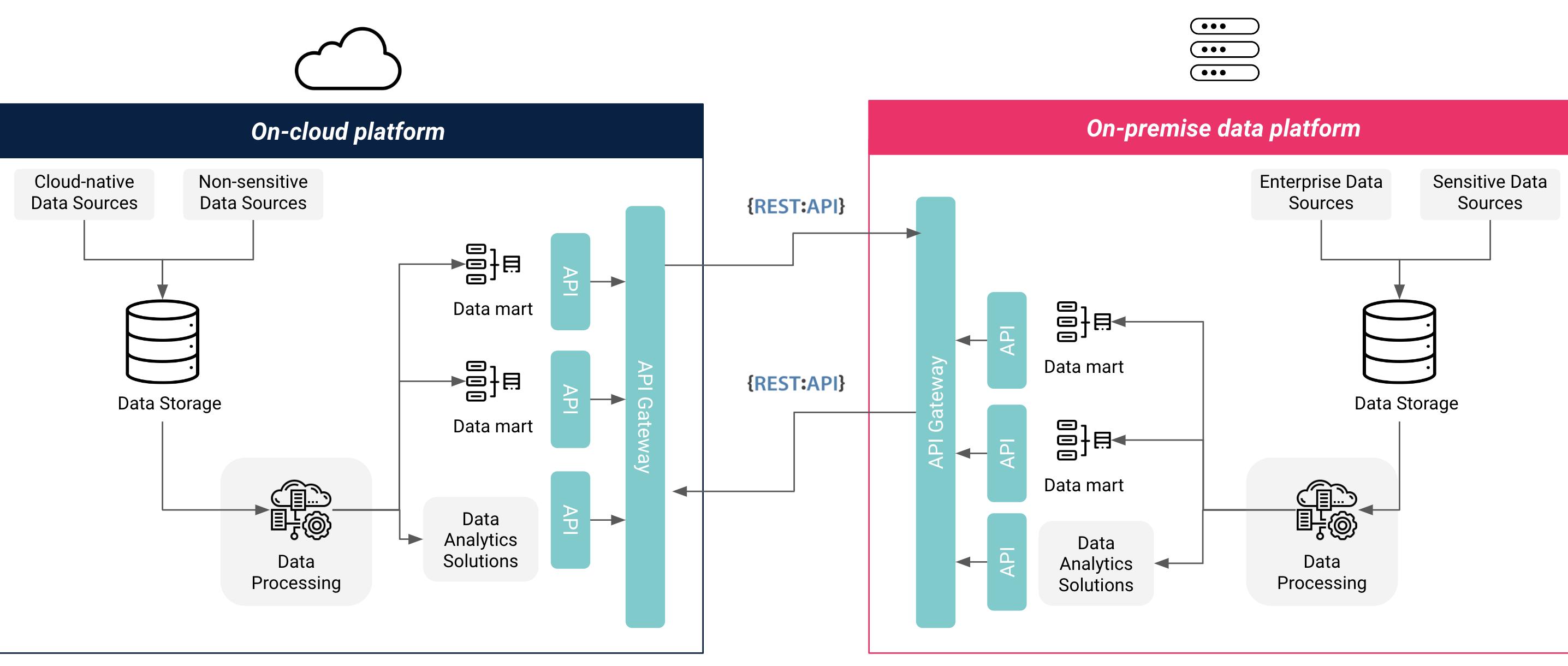

There are several infrastructure options for a big data platform: fully on-premises, fully cloud, or hybrid cloud, each with its own advantages and challenges. Organizations must weigh a number of factors when choosing the most suitable infrastructure option for their big data platform, including data security and residency requirements, data source integrations, functionality and scalability requirements, and costs and timelines. A fully cloud architecture offers lower and more predictable costs, ready-to-use services and integrations, and rapid scalability, but gives up control over the hardware and may not comply with data privacy and residency regulations. A fully on-premises architecture provides full control over hardware and data security and usually complies with privacy and residency regulations, but incurs higher costs and requires long-term planning for scalability. A hybrid cloud architecture offers the best of both worlds and eases a full migration to the cloud at a later date, but may require a more complex setup.

Many organizations opt for a hybrid infrastructure for their big data platforms because of organizational requirements to keep highly sensitive data (such as financial and customer data) on their own servers, or because there are no government-certified cloud service providers (CSPs) that meet local data privacy and residency requirements. These organizations also prefer to keep cloud-based or non-sensitive data sources in the cloud to optimize storage and compute costs and to take advantage of the ready-to-use data analytics and machine learning services available from CSPs. Other organizations that have no organizational or regulatory requirements for data residency within the company or the country opt for a fully cloud infrastructure to speed up implementation, optimize costs, and gain easily scalable resources.
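The placement reasoning above can be sketched as a small rule. This is only an illustrative sketch: the `DataSource` fields, the `place` function, and the example source names are hypothetical, and a real decision would also account for costs, integrations, and timelines.

```python
from dataclasses import dataclass
from enum import Enum


class Location(Enum):
    ON_PREMISES = "on-premises"
    CLOUD = "cloud"


@dataclass(frozen=True)
class DataSource:
    name: str
    sensitive: bool           # e.g. financial or customer data
    residency_required: bool  # must stay within the company or the country


def place(source: DataSource, certified_csp_available: bool) -> Location:
    """Decide where a source lives in a hybrid setup (illustrative rule only)."""
    # Highly sensitive data stays on the organization's own servers.
    if source.sensitive:
        return Location.ON_PREMISES
    # Residency-bound data moves to the cloud only if a certified CSP exists.
    if source.residency_required and not certified_csp_available:
        return Location.ON_PREMISES
    # Everything else goes to the cloud for cost and scalability.
    return Location.CLOUD


# Hypothetical sources, assumed for the example.
sources = [
    DataSource("customer_accounts", sensitive=True, residency_required=True),
    DataSource("web_clickstream", sensitive=False, residency_required=False),
    DataSource("store_footfall", sensitive=False, residency_required=True),
]
placement = {s.name: place(s, certified_csp_available=False) for s in sources}
for name, location in placement.items():
    print(f"{name}: {location.value}")
```

With no certified CSP available, only the non-sensitive source without residency constraints ends up in the cloud, which mirrors the hybrid split described above.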

Figure 1: Hybrid cloud and on-premises data platform infrastructure

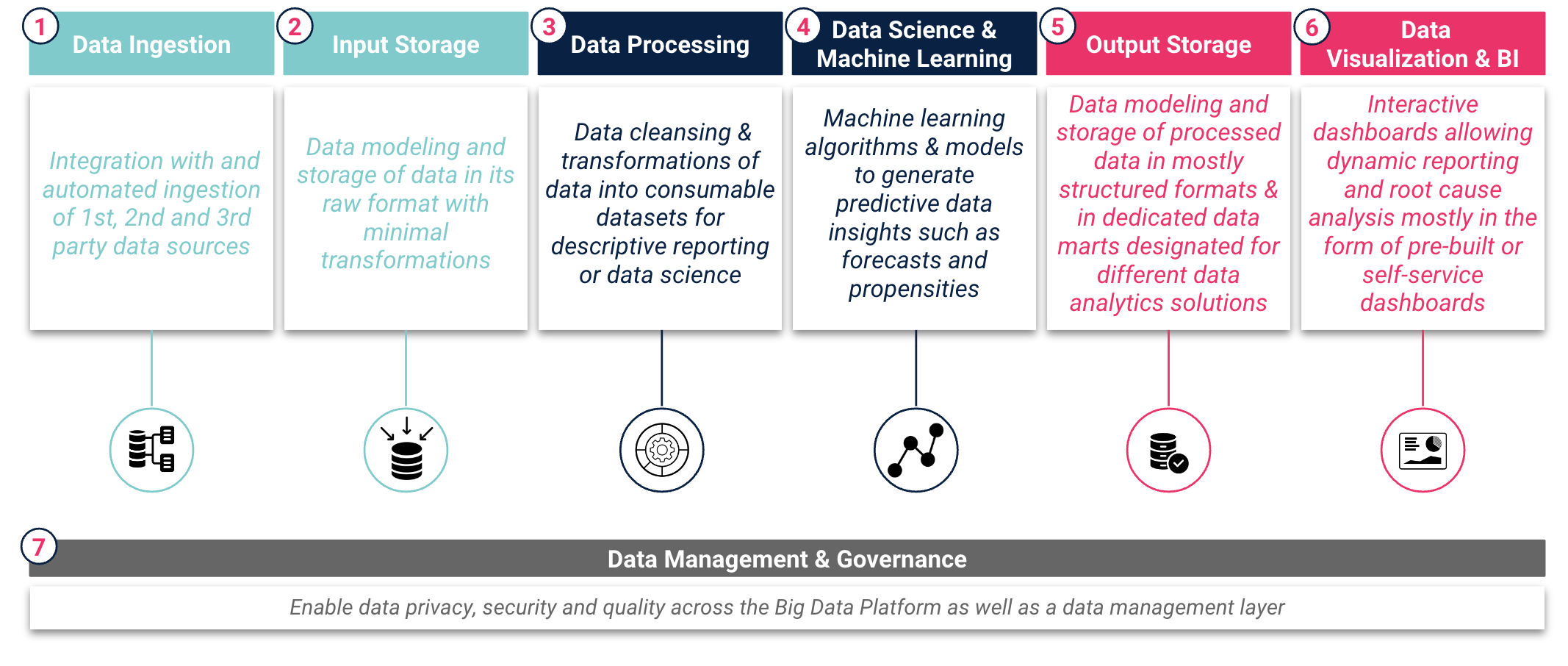

A big data platform typically involves building seven main layers that reflect the data lifecycle, from "raw data" to "information" and "insights". Organizations should carefully consider the appropriate services and tools required for each layer to ensure a seamless data flow and efficient generation of data insights. These services and tools must perform key functions in each layer of the big data platform, as shown in Figure 2.

Figure 2: Big data platform layers

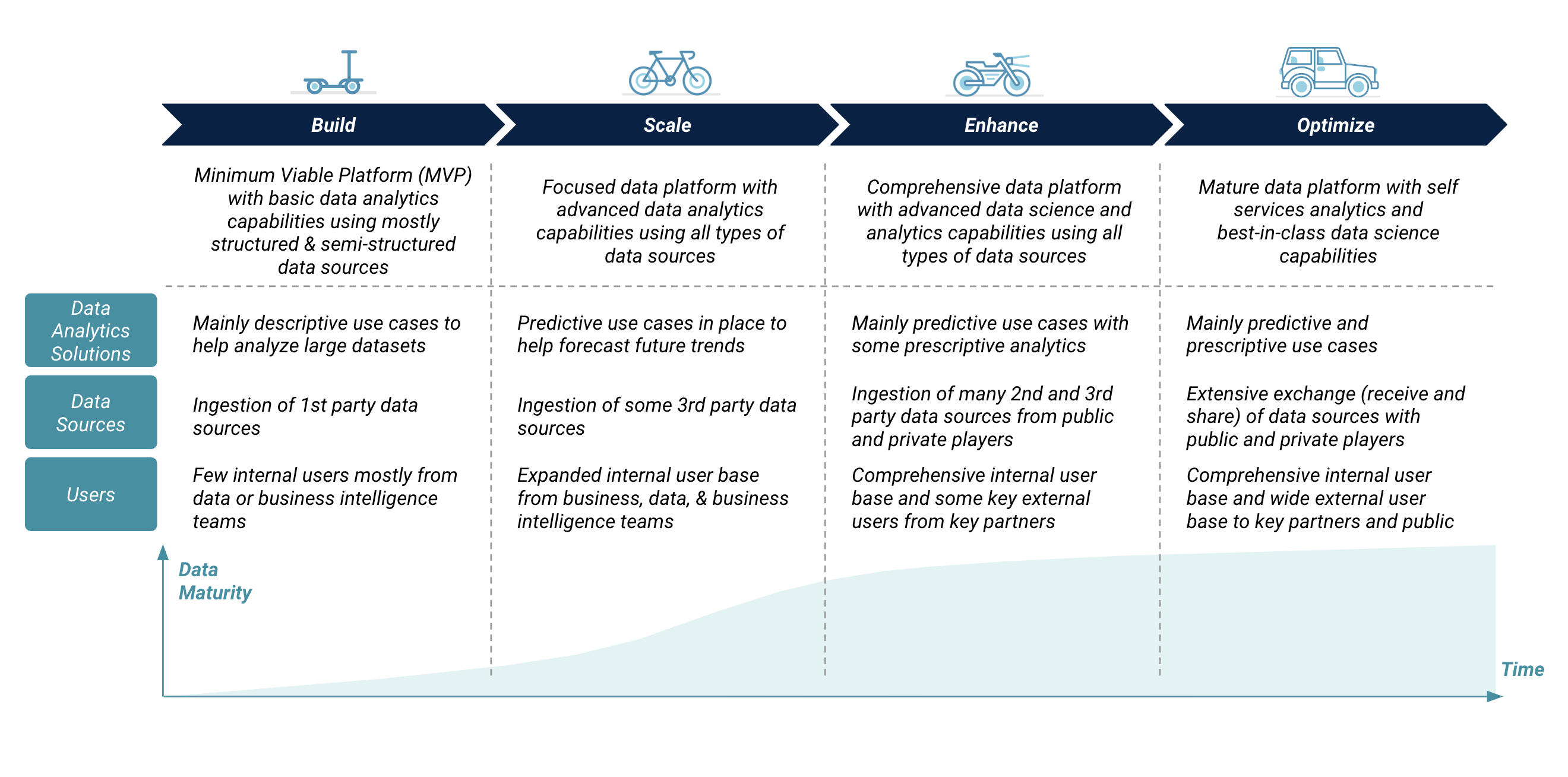
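As a rough illustration of the lifecycle from "raw data" to "information" to "insights", the sketch below runs a toy dataset through two steps. It does not model the seven layers in Figure 2; the event records, field names, and the functions `to_information` and `to_insight` are assumptions made for the example.

```python
from collections import defaultdict

# Raw data: hypothetical sales events as they might arrive from source systems.
raw_events = [
    {"region": "north", "amount": "120.50"},
    {"region": "south", "amount": "80.00"},
    {"region": "north", "amount": "not-a-number"},  # malformed record
    {"region": "south", "amount": "45.25"},
]


def to_information(events):
    """Clean and aggregate raw events into revenue per region."""
    totals = defaultdict(float)
    for event in events:
        try:
            totals[event["region"]] += float(event["amount"])
        except ValueError:
            continue  # drop records whose amount cannot be parsed
    return dict(totals)


def to_insight(revenue_by_region):
    """Derive a simple insight: the region with the highest revenue."""
    return max(revenue_by_region, key=revenue_by_region.get)


information = to_information(raw_events)
insight = to_insight(information)
print(information)
print(insight)
```

In a real platform each step would be handled by dedicated services in the corresponding layer; the point here is only that each stage consumes the output of the previous one.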

Evolution of the Big Data platform

A big data platform should be developed in stages, starting with a minimum viable platform (MVP) and continuing with incremental upgrades. An organization should synchronize the evolution of its big data platform with the growing demand for more and faster data insights and intelligence for business decision-making. This growing demand affects the complexity of the big data platform in terms of data analytics solutions, the volumes and types of data sources, and internal and external users. The evolution of the big data platform includes adding more storage and compute resources, advanced features and functionality, and improvements to the platform's security and management.

Exhibit 3: Evolution of the Big Data platform

"We have seen that many organizations tend to build big data platforms with advanced and unnecessary features from day one, which increases the technology cost of ownership. The deployment of a big data platform should start with a minimum viable platform and evolve according to business and technology needs. In the early stages of building the platform, organizations should put in place a solid data governance and management layer that ensures data quality, privacy, security, and compliance with local and regional data laws." Anthony Cassab, Data Consulting Director at Artefact

Guidelines for a future-ready Big Data platform

A data platform should be built according to key architectural guidelines to ensure it is future-ready, allowing easy scaling of resources, portability across different on-premises and cloud infrastructures, upgrading and replacement of services, and expansion of data collection and sharing mechanisms.

"An adaptable, modular platform that can scale as business needs evolve is preferable to a 'black box' platform that is well integrated but allows limited customization. These platform architectures can be built fully or partially in the cloud to take advantage of cloud computing benefits, such as scalability and cost-effectiveness, while meeting the privacy and security requirements of data protection regulations." Faisal Najmuddin, Data Engineering Director at Artefact

In summary, a big data platform brings multiple benefits to organizations, such as centralizing data sources, enabling advanced data analytics solutions, and providing enterprise-wide access to analytics solutions and data sources. However, implementing a big data platform involves a series of strategic decisions: choosing the right infrastructure or infrastructures, adopting a future-ready architecture, selecting standard and "migratable" services, carefully considering data protection regulations, and, finally, defining an optimal evolution plan that is closely tied to business requirements and maximizes the return on data investment.
